Courrier des statistiques N6 - 2021

A service at the heart of database quality: presentation of an ATMS prototype

Databases should ideally evolve over time along with changes in their environment according to their uses. Taking these changes into account has a strategic impact on administrative data quality, and therefore on the statistical information systems using them. In order to help manage the transformations resulting from the observable reality affecting data, this article proposes an innovative, operational approach that can be generalized to any relational database management system.

Thanks to research advances in data quality, the study of anomalies and their management has given rise to an original prototype called ATMS (Anomalies & Transactions Management System). This service tracks and processes anomalies and supports the back tracking method: as part of a preventive approach to data quality, the method aims to structurally improve quality at the source, and its implementation provides a significant return on investment. The characteristics of the ATMS prototype are combined with the use of data quality tools in curative approaches, offering new perspectives for statistical information systems.

- The “closed world assumption” at the heart of a fluctuating reality

- Significant challenges in respect of administrative databases

- Academic work on data quality

- Box 1. Data Quality Tools

- When studying anomalies improves data quality

- Deterministic and empirical data

- Identifying and interpreting the evolution of the observed reality

- Anomaly typology trial

- Three time scales in constant interaction

- Anomalies and Transactions Management System: a functional overview

- Thomas Redman’s data tracking

- Back tracking: from orthogonality to interaction

- The relational ATMS: a dynamic between data in production and anomaly management

- The main database and the ATMS: both hemispheres of the world represented

- Data routing

- Implementation of the prototype and outlook

- Box 2. Some technical details on the ATMS

- Legal references

“Evolution is inseparable from structure because the whole itself is less a fixed system than the provisional establishment of a movement, the intelligible order of a trend […]”.

Raymond Aron (La philosophie critique de l’histoire [The Critical Philosophy of History], 1969)

The “closed world assumption” at the heart of a fluctuating reality

Statisticians are using administrative and transactional data more and more, either on their own or in addition to other sources (surveys, etc.) (Hand, 2018). Therefore, assessing their quality is also a strategic requirement for statistics, whether they are public or not.

Ideally, a database should evolve along with the interpretation of the realities it helps to capture. Normative realities are indeed shifting. Thus, after the attacks of 2015 and the following years, the police files on the issue, both in France (Chapuis, 2018) and in Belgium (Agence Belga, 2018), experienced numerous anomalies: potential duplicates, “false actives” despite a dismissal of the case, early deletions, interpretation difficulties, etc. These have accumulated as a result of the emergence of new threat categories, as well as the urgency with which these sensitive data had to be processed. Such developments are constantly at work in administrative databases, which are important sources for statisticians.

Every well-designed operational database is based on one hypothesis, that of a “closed world”: definition domains specify the set of values allowed within the database model or schema (integrity constraints); “area rules”, that is, business rules, can also be expressed in the application code and thus contribute to the definition of data. Therefore, a value that is not included in the definition domain is considered false and must be rejected from the database.
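The closed world assumption can be illustrated with a small sketch. It assumes an in-memory SQLite database and a hypothetical `employer` table whose `category` column is restricted by a `CHECK` constraint; the table, the column and the list of categories are illustrative and are not taken from any of the systems discussed in this article.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Create a hypothetical table whose definition domain is a CHECK constraint."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE employer (
            id INTEGER PRIMARY KEY,
            category TEXT NOT NULL
                CHECK (category IN ('chemicals', 'construction', 'renewable energy'))
        )
        """
    )
    return conn


def try_insert(conn: sqlite3.Connection, employer_id: int, category: str) -> bool:
    """Return True if the row is accepted, False if the constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO employer (id, category) VALUES (?, ?)",
                (employer_id, category),
            )
        return True
    except sqlite3.IntegrityError:
        return False


conn = build_db()
accepted = try_insert(conn, 1, "chemicals")          # inside the definition domain
rejected = not try_insert(conn, 2, "geothermal energy")  # outside it: refused
```

Under this assumption, a value such as “geothermal energy” is simply refused, whether or not it reflects a genuine new situation in the field.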

However, at the level of millions of records and hundreds of fields and information flows, the phenomena emerging in the field are not immediately taken into account in databases. Information is built up gradually, through human interpretation and without any absolute reference frame.

Apart from the basic definition domain that meets the closed-world hypothesis, “normative reality” evolves continuously and unpredictably, ignoring any rule of deterministic causal explanation. Additionally, in the case of a database as an instrument of action on reality (in the administrative, medical, environmental, military sectors, etc.), these issues are fundamental and affect data quality.

However, we can better take these phenomena into account at an operational level, at the heart of the information system. This is done in particular through the interpretation of anomalies and their processing.

Data quality research is concerned with the world of anomalies for operational purposes. Their analysis resulted in the implementation of a service called ATMS, Anomalies & Transactions Management System. This system allows the history of anomalies and their processing to be tracked over time based on indicators deemed strategic and variable according to the context of use. This is an innovative concept that has already been tried and tested in practice and that is based on a method known as back tracking.

Significant challenges in respect of administrative databases

Many large-scale administrative information systems are affected by data quality issues; in France, for example, this is the case of the Nominative Social Declaration (Déclaration Sociale Nominative, DSN). Within this declaration, any control deemed essential is blocking (Renne, 2018), but some controls are “non-blocking” in order to avoid slowing down the process of gathering or collating declarations. The latter require manual post-processing, which database managers try to streamline and which raises a “cost-quality” trade-off (Renne, 2018). The example of the DSN also shows that database quality requires special attention to the management of regulatory changes (Humbert-Bottin, 2018).

In Belgium, the LATG database in the late 1990s, and its modernized heir, the DmfA database at the beginning of the 2000s, were “case studies” of data quality research (Boydens, 1999; 2018) given their scale. The DmfA database currently allows for the annual collection and redistribution of 65 billion euros of social security contributions and benefits throughout Belgium. It is subject to quarterly legislative amendments and has been a large-scale integrated information system since 2001, including features that are functionally close to the DSN. It is one of the platforms that feed many statistics on employment and salaries in Belgium. Over the last 20 years, research has also focused on the quality of many other transactional databases that are the source of statistical output.

Data quality is also an issue in the context of cross-functional administrative registers, such as those planned in Germany. These are indeed based on numerous interconnections that may raise important semantic issues.

The rate of adaptations made to an information system varies according to the aims: administrative or medical databases, for example, as tools that can have an impact on reality, on the one hand, or statistical information systems, on the other, as instruments to observe or support decision-making. For the former, the rate of change will ideally be fast. For the latter, the rate of structural change is much slower or even non-existent (e.g. in the case of survey results) so as to ensure long-term comparability, while the timeliness and quality of the sources that feed them will matter.

In this context, statisticians often wonder what the best moment is to take “the picture” in order to extract data from an administrative information system, over which they experience a kind of loss of control (Rivière, 2018). Here, the results of work on data quality offer constructive possibilities.

Academic work on data quality

The quality of a database refers to its relative suitability for the purposes it was designed for, under budgetary constraints. Research in this field has been ongoing since the 1980s (Madnick et alii, 2009), alongside companies’ need to have adequate addresses and contact information in their customer files. This is how data quality tools appeared (Box 1), a field that developed very quickly internationally and which remains very active (Hamiti, 2019).

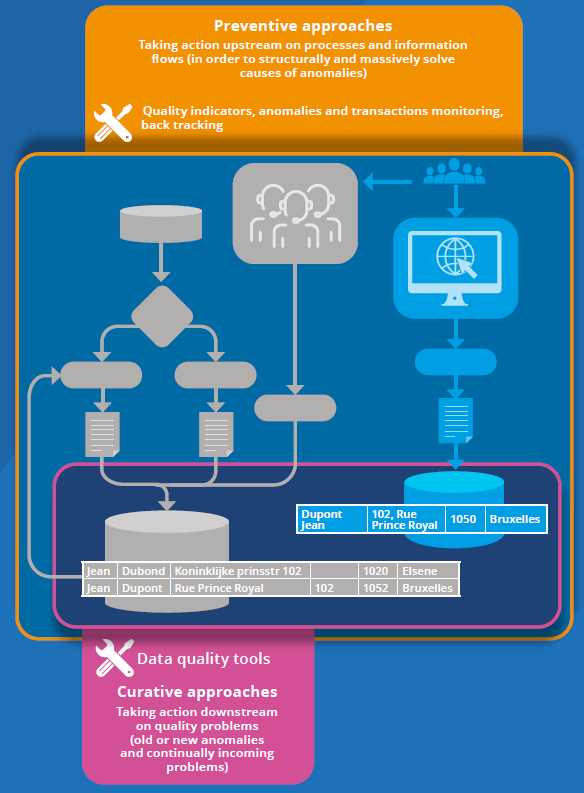

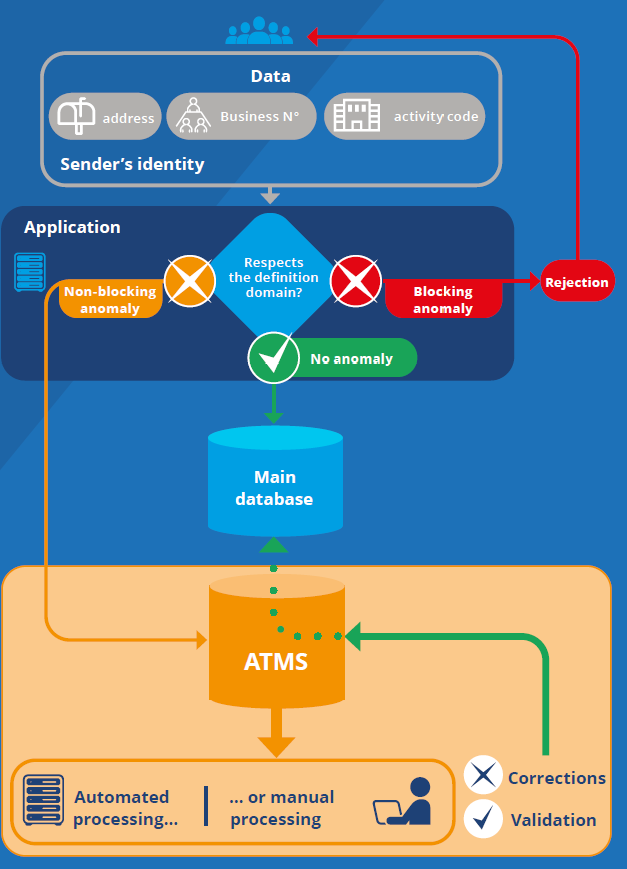

As part of a “curative” approach (Figure 1), the purpose of data quality tools, based on thousands of algorithms that are regularly enriched, is to detect formally identifiable quality problems (suspected duplicates, etc.) that are already present in the databases and to solve them afterwards semi-automatically. These tools also allow for the automated management of problem cases “online”, for example when data are entered on a website.

However, if we only act downstream, we do not structurally solve the cause of these problems which will constantly recur. These may actually be caused by design flaws, by the evolution of the reality represented, or by the flows and procedures that feed databases (for example, unnecessarily redundant processes that systematically generate duplicates). Therefore, without further action upstream, data quality tools are destined to be used ad infinitum. However, these remain essential, as users do not necessarily have access to the flows and procedures that produced the data they are using.

Therefore, in addition to “curative” interventions, “preventive” approaches are essential in order to identify and structurally resolve the causes of anomalies at the source (Figure 1).

The Free University of Brussels (Université libre de Bruxelles) has had a course dedicated to data quality since 2006, presenting both types of approach (Boydens, 2021). Some of the research is directed towards preventive methods (Boydens, 1999; 2010; 2012; 2018; Bade, 2011; Radio, 2014; Dierickx, 2019). Current work in the field of data quality research also focuses on record linkage-type algorithms (Batini and Scannapieco, 2016). The latter are used by data quality tools in the context of “comparison and duplicate elimination” operations (Box 1).

Many studies take a deterministic view: they consider the deviation of databases from reality in terms of formal inaccuracy/accuracy (Srivastava et alii, 2019). However, there is not necessarily a one-to-one mapping between the empirical reality and its representation in a database.

Box 1. Data Quality Tools

Alongside the preventive approach to data quality described in this article and based on the ATMS, a curative approach exists. This is intended for semi-automatic improvement of the quality of data when it enters the system or is already present in a pre-existing database or an ATMS. Most often, the curative approach will use free or commercial tools developed by a third party. Typically, these tools cover one to three of these broad families of functions:

- profiling: qualitatively and quantitatively analysing data to assess its quality and often to uncover unexpected problems. Example: length distribution of values in a column, type inference, verification or discovery of functional dependencies;

- standardization: ensuring the data conform to a standard defined with the project owner or to an existing repository, which can be provided with the tool. Example: cleaning and standardization of the display of telephone numbers, correction and enrichment of postal addresses;

- comparison and duplicate elimination: detecting duplicates and inconsistencies in records within a dataset or between several datasets (potentially from separate databases, e.g. for integration). The comparison is based on discriminant columns and error-tolerant algorithms (edit distance measurement, phonetic footprint comparison, etc.), determined with the project owner, who provides their knowledge of the area. Here, the most advanced tools make it possible for the relevant original records to be retained and linked without overwriting them, as well as making it possible to build a record that represents each cluster thus identified. This record will then be the “surviving record”, used to eliminate duplicates from the datasets if necessary.
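As an illustration of the comparison step, here is a minimal sketch of error-tolerant matching based on edit distance (Levenshtein distance). The record layout, the `name` column and the threshold of two edits are assumptions chosen for the example; actual tools combine many more algorithms and rely on the knowledge of the project owner.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) by dynamic programming."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def normalise(text: str) -> str:
    """Very light standardization: lower case and single spaces."""
    return " ".join(text.lower().split())


def suspected_duplicates(records, column, max_distance=2):
    """Return pairs of ids whose discriminant column differs by few edits."""
    pairs = []
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            a = normalise(records[i][column])
            b = normalise(records[j][column])
            if levenshtein(a, b) <= max_distance:
                pairs.append((records[i]["id"], records[j]["id"]))
    return pairs


# Hypothetical records, for illustration only.
records = [
    {"id": 1, "name": "Dupont SA"},
    {"id": 2, "name": "dupont  s.a"},
    {"id": 3, "name": "Durand SPRL"},
]
pairs = suspected_duplicates(records, "name")
```

The pairs returned are only suspected duplicates: the original records are kept, and the decision to link them or to build a surviving record remains a separate step.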

These tools are typically used in batch mode, i.e. by the deferred targeting of one or more already existing data sets. However, some also allow for further upstream intervention, setting out these functions in the form of an API* that the application can call on a case-by-case basis as the data enter the system. This method of working makes it possible to standardize or eliminate duplicates from the data before entering it into the database and even, if necessary, to make this entry conditional on the success of the operations that precede it. The tool thus effectively implements a data firewall in addition to the anomaly detection system already implemented by the application.

* Application Programming Interface

Figure 1. Two Interdependent Approaches to Assess and Improve Data Quality

When studying anomalies improves data quality

Aside from the obvious benefit for data quality, the study of anomalies is important because of the high percentage of anomalies that structurally affect information systems: up to 10% according to Boydens (2012) and other sources (Van Der Vlist, 2011). However, when the issues (social, financial, medical, etc.) so require, these anomalies must be examined semi-automatically, or even manually, which is often slow and tedious.

Where do anomalies come from, what is their typology and, accordingly, how can we best manage them? In order to answer these questions, we must first return to the notion of data as approached here, which was recently studied from the point of view of statisticians (Rivière, 2020).

Deterministic and empirical data

In the world of databases (Hainaut, 2018), a piece of data is a triplet (t, d, v) composed of the following elements:

- a title (t), referring to a concept (an administrative activity category, for example);

- a definition domain (d), composed of formal assertions specifying the set of values allowed in databases for this concept (a controlled list of alphabetical values of maximum length l, for example), possibly supplemented by area rules found in the application code;

- and finally, a value (v) at an instant i (the chemicals sector, for example).
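The triplet can be written down as a small data structure. The sketch below is only one possible reading: the class name `Datum`, the list of categories and the maximum length are hypothetical, and the definition domain is modelled as a simple predicate on the value.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Datum:
    title: str                     # t: the concept the value refers to
    domain: Callable[[str], bool]  # d: formal assertions on allowed values
    value: str                     # v: the value recorded
    instant: date                  # i: the instant at which v was recorded

    def is_in_domain(self) -> bool:
        """True if the value satisfies the definition domain."""
        return self.domain(self.value)


# Hypothetical definition domain: a controlled list of alphabetical values
# of maximum length MAX_LENGTH.
ACTIVITY_CATEGORIES = {"chemicals", "construction"}
MAX_LENGTH = 30


def activity_domain(value: str) -> bool:
    return (value.isalpha()
            and len(value) <= MAX_LENGTH
            and value in ACTIVITY_CATEGORIES)


valid = Datum("activity category", activity_domain, "chemicals", date(2021, 1, 1))
unknown = Datum("activity category", activity_domain, "geothermal", date(2021, 1, 1))
```

Here `valid.is_in_domain()` holds and `unknown.is_in_domain()` does not; whether the second value is actually wrong is precisely the question raised by empirical data.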

Thus, a distinction is made between deterministic data and empirical data (Boydens, 1999). The former are characterized by the fact that a theory is available at all times so that it is possible to decide whether a value v is correct or not. This is the case for a simple algebraic operation on an object deterministic in itself, such as the sum of values for a given numerical field in a database at a given instant i. Since the rules of algebra do not change over time, and neither does the object being evaluated, one can know at any time whether the result of such a sum is correct or not. Indeed, we have a stable reference frame to be used for that purpose.

By contrast, for empirical data, which are subject to human experience, the rule changes over time along with the interpretation of the values it makes it possible to understand. This is the case, for example, in the medical field (where theory evolves as observations are made on patients suffering from a pathology, as shown by current research on the coronavirus), as well as in the legal and administrative fields, where the interpretation of legal concepts changes with the continuous evolution of the reality being processed and with that of case law. How can we evaluate their validity without any absolute reference for this purpose?

Identifying and interpreting the evolution of the observed reality

Empirical data are like “moving” concepts at the heart of databases. In other words, the meaning of the concepts evolves with the interpretation of the values they make it possible to understand, in the absence of an absolute and stable reference frame.

If we pursue this epistemological finding, which at first seems to have no operational outcome, it is nevertheless possible to build a widely applicable method for assessing and improving the quality of such information. From then on, the question is not only “are the data correct?”, but above all “how are the data built up over time?”.

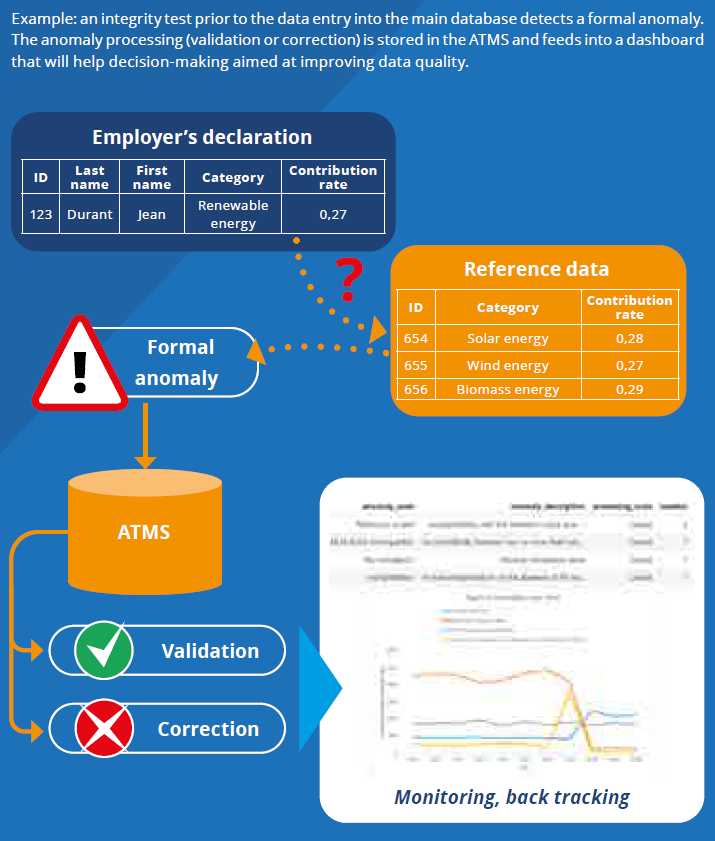

Thus, with globalization, new cases not initially foreseen in the reference tables and in the legislation may arise at national level. This can happen, for example, in the field of energy activity: geothermal energy production varies greatly around the world, and some foreign companies may therefore classify their operating units (“renewable energy”) less precisely than the legislation of the country of operation potentially requires after examination (“geothermal energy”). In our example, the latter category is not yet taken into account in the reference table, which will have to be adapted, as explained below (Figure 2).

In this case, it is not possible to check whether the database values are correct or not in a deterministic way. Indeed, when an inconsistency happens between such a value entered in the database and the reference tables used to test its validity, it may be essential, when the issues are strategic, to carry out a manual verification, by contacting the citizen or company concerned, for example. Additionally, such an intervention may often be used if the expected category in the reference file for a given employer does not correspond to the declared category, as the employer may have changed category since registration without this being recorded (because the employer did not report it, for example).

This is one of the areas where an ATMS proves its worth: it records and archives anomalies and transactions, which allows them to be monitored continuously and, where necessary, the definition domain to be modified subsequently to adapt it to a newly observed reality.

Let’s illustrate this mechanism (Figure 2) with another concrete example. In 2005, the catastrophe of Hurricane Katrina killed more than 1,800 people in the USA. The measuring instruments to alert citizens to leave the area were in place. However, in retrospect, we realized that the databases that fed them were not designed to take into account the evolution of certain phenomena which, although underestimated at the time, turned out to be decisive: these were the rise in water levels in the oceans as a result of global warming, as well as over-construction, which no longer allows for the rapid flow of water into the soil. Therefore, the evacuation of the population came far too late. These changes to reality are still at work today in the hydrological and climatic fields (Boydens, Hamiti and Van Eeckhout, 2020).

Figure 2. Violation of the “Closed World Assumption” in an Empirical Domain

Anomaly typology trial

A typology of anomalies then emerges, according to their potential cause and how to look at them:

- definite formal error due to human intervention during the update (in the case of a mandatory field not completed, for example);

- presumed formal errors: presumed duplicates (Figure 1) e.g. due to redundant upstream processes or inconsistency with a reference table which is not known to have been updated;

- formally undetectable error a priori: for example, the omission of an update.

The last two cases may indicate anomalies due to the evolution over time of the empirical domain represented and emerging new concepts not taken into account (Figure 2).

Depending on what is needed, it will be decided to consider these anomalies as:

- blocking: they are rejected from the database in accordance with the closed world assumption mentioned above;

- non-blocking: the values are still integrated in varying ways within the information system with the corresponding record, for two sets of reasons:

  - rejecting them from the system would slow down the area process (e.g. collecting social security contributions) and they are not considered “strategic” (see above);

  - taking them into consideration in the information system is essential, as they are considered strategic and are linked to empirical data, the definition of which is potentially evolving. Beyond a certain threshold to be evaluated by the specialists in the field, their processing requires human interpretation, as they may indicate the emergence of phenomena that will be important to take into account in the information system (Figure 2), through version management. Moreover, they potentially originate in the flows feeding the database, a problem which, once identified, can be structurally resolved, as we will see later with back tracking.
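The distinction between blocking and non-blocking anomalies can be sketched as a routing decision. The sketch below is a simplification under stated assumptions: the two controls, the `Stores` container and the field names are hypothetical, and the in-memory lists merely stand in for the main database and the anomaly history.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    BLOCKING = "blocking"
    NON_BLOCKING = "non-blocking"


@dataclass
class Stores:
    """In-memory stand-ins for the main database and the anomaly history."""
    main_db: list = field(default_factory=list)
    atms: list = field(default_factory=list)
    rejected: list = field(default_factory=list)


KNOWN_CATEGORIES = {"chemicals", "construction"}  # hypothetical reference table


def check(record: dict) -> list[tuple[str, Severity]]:
    """Hypothetical controls: one blocking, one non-blocking."""
    anomalies = []
    if not record.get("employer_id"):
        anomalies.append(("missing employer_id", Severity.BLOCKING))
    if record.get("category") not in KNOWN_CATEGORIES:
        anomalies.append(("category not in reference table", Severity.NON_BLOCKING))
    return anomalies


def route(record: dict, stores: Stores) -> str:
    anomalies = check(record)
    if any(severity is Severity.BLOCKING for _, severity in anomalies):
        stores.rejected.append(record)  # closed world: the record is refused
        return "rejected"
    stores.main_db.append(record)       # the value is still integrated
    for label, _ in anomalies:          # and the anomaly is kept for follow-up
        stores.atms.append({"employer_id": record["employer_id"],
                            "anomaly": label, "status": "open"})
    return "accepted with anomalies" if anomalies else "accepted"


stores = Stores()
results = [
    route({"employer_id": 1, "category": "chemicals"}, stores),
    route({"employer_id": 2, "category": "geothermal energy"}, stores),
    route({"employer_id": None, "category": "chemicals"}, stores),
]
```

Which controls are blocking is a decision for the specialists in the field; the sketch only shows that a non-blocking anomaly leaves the record in production while opening an entry to be corrected or validated later.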

The decision to identify “non-blocking” empirical anomalies is sensitive because it is based on predictive knowledge of the realities processed at a given time, which is itself an evolving element likely to be subjected to concerted adaptation within the information system. This brings us back to the epistemological issue of the “hermeneutic loop” (Boydens, 1999; 2012).

How can “non-blocking anomalies” and their processing be taken into account without affecting either the performance or the integrity of data in production?

With the ATMS, or Anomalies and Transactions Management System, we switch from a “closed world assumption” to an “open world assumption” under automated controls.

Three time scales in constant interaction

The evolution of norms, the changes made within databases, and the movement of observable “phenomena” in the field are interrelated but asynchronous. Depending on their nature, they operate within different time scales.

By applying the historian Fernand Braudel’s notion of “layered time scales” (Braudel, 1949) to the study of administrative information systems with the aim of improving their quality, we can differentiate:

- “the long-term” of the norms (legal and more broadly empirical), whose rate is relatively slower (thus, in the area of social security in Belgium, legislative amendments requiring a new version of the system take place quarterly);

- “the medium term” for the relatively faster management of databases, because of technological developments among other things;

- “the short term” for the observable reality, that of citizens or companies accountable to the administration, which is constantly evolving (Boydens, 1999).

Fernand Braudel’s concept of “layered time scales” is therefore a construction that makes it possible to identify a hierarchy between several interacting sequences of change. This approach can be supplemented by the work of the German philosopher Norbert Elias and his notion of “evolutionary continuum” (Elias, 1986). Indeed, interactions between time scales are neither deterministic nor unidirectional, as Braudel’s model alone would suggest, in which the slowest sequences determine the fastest ones. Let’s illustrate these interactions with a concrete example.

In 1986, a team of British scientists, specializing in globe studies, reported falling ozone levels in the stratosphere. Based on this observation, NASA researchers re-examined their globally distributed stratospheric databases; they discovered that the phenomenon of declining ozone levels had remained hidden for a decade because the corresponding low values were systematically considered as measurement errors. Indeed, the scientific theory of the time, modelled in their databases, didn’t allow them to conceive that such values could be valid. Subsequently, the definition domain of the database was adapted in order to consider valid the low rates that were previously in an anomalous state (Boydens, 1999). Today, such phenomena continue to evolve.

From a dynamic point of view, an ideal database should therefore match the rate of its updates to the unpredictable distribution of changes to the reality it covers in “layered time scales”. Added to this challenge is the need, again revealed only after the fact, to integrate unforeseen observations, which the closed world assumption prohibits in principle and which come to light in particular through the anomalies mentioned above.

Anomalies and Transactions Management System: a functional overview

The ATMS, or Anomalies and Transactions Management System, helps to identify the emergence of, and increases in, “validations” of anomalies deemed strategic during the manual processing phase. A validation operation means that, after examination, an officer considered that the anomaly actually corresponds to a relevant value. The operator can then “force” the system to accept the value without affecting the integrity of the main database; in particular, the system should include version management. Depending on their access rights, officers have access to both the main database and the ATMS: they can therefore consult all data at any time, whether or not they are in potential anomaly status, regardless of their processing stage (correction, validation, etc.) (Figure 3).

If the rate of such anomaly validations is high and recurrent, or if the validated anomaly is strategic, it is possible that the database definition domain itself is no longer relevant. In principle, the approach focuses on systematic cases, but it can also cover less frequent cases involving types of anomalies that are sensitive for the area (the emergence of a rare pathology, for example).

An algorithm can then send a “signal” to the database managers so that they examine whether a structural change to its definition domain, or even a revision of the corresponding standard (legislation, theory, etc.), is required. The fluctuations in environmental or administrative data illustrated above are examples of where this mechanism is applied. In addition, the processing history of anomalies must be kept (the same anomaly may be corrected or validated several times following field inspections or interpretation of regulations).
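Such a signal could be derived from the processing history along the following lines. This is a hedged sketch, not the algorithm used in production: the function name, the thresholds (`min_cases`, `rate_threshold`) and the notion of `strategic_types` are assumptions, and the history is reduced to pairs of anomaly type and outcome.

```python
from collections import Counter


def validation_signals(history, min_cases=20, rate_threshold=0.5,
                       strategic_types=frozenset()):
    """Return {anomaly_type: validation_rate} for the types worth a review.

    history: iterable of (anomaly_type, outcome) pairs, where outcome is
    "corrected" or "validated". All thresholds are illustrative.
    """
    totals = Counter()
    validated = Counter()
    for anomaly_type, outcome in history:
        totals[anomaly_type] += 1
        if outcome == "validated":
            validated[anomaly_type] += 1

    signals = {}
    for anomaly_type, total in totals.items():
        rate = validated[anomaly_type] / total
        # Systematic case: many anomalies, most of them validated by officers.
        frequent = total >= min_cases and rate >= rate_threshold
        # Sensitive case: any validation of a type deemed strategic.
        sensitive = anomaly_type in strategic_types and validated[anomaly_type] > 0
        if frequent or sensitive:
            signals[anomaly_type] = rate
    return signals


# Hypothetical history, for illustration only.
history = (
    [("unknown category", "validated")] * 30
    + [("unknown category", "corrected")] * 10
    + [("missing field", "corrected")] * 50
    + [("rare pathology", "validated")]
)
signals = validation_signals(history, strategic_types={"rare pathology"})
```

In this example the “unknown category” type is flagged because three quarters of its anomalies were validated, and the single validated “rare pathology” case is flagged because the type is declared strategic; the decision to change the definition domain remains with the database managers.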

Without such intervention, the gap between the database and reality would widen. Indeed, if the schema is not adapted, anomalies linked to these cases will continue to grow, requiring a potentially burdensome manual review that is likely to slow down the processing of files and affect the quality of data, with financial or social impacts.

Upstream, the ATMS helps to improve the quality of the “source” databases and provides various indicators on the status of the processing of anomalies to those involved in the management of the information system (project manager and project owner). For example, it makes it possible to:

- identify “peaks” of anomalies, corrections and validations (which may in turn lead to a restructuring of the database system);

- identify anomalies that would never be processed (neither corrected nor validated);

- determine how long is needed for the database to stabilize, as specified anomalies are processed, in accordance with needs, and when it is most appropriate to take a snapshot of it for further use.
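Two of these indicators can be sketched from an anomaly history. The record layout (`opened` and `closed` dates, with `closed` set to `None` while an anomaly is neither corrected nor validated) and the 10% threshold are assumptions made for the example; the stabilization measure also assumes that all anomalies of the batch were opened on or before the start date.

```python
from datetime import date, timedelta


def unprocessed(anomalies, as_of, min_age_days):
    """Anomalies neither corrected nor validated after min_age_days."""
    return [a for a in anomalies
            if a["closed"] is None and (as_of - a["opened"]).days >= min_age_days]


def days_to_stabilise(anomalies, start, max_open_share=0.1, horizon_days=365):
    """Days after `start` until the share of open anomalies falls to the threshold.

    Returns None if the threshold is not reached within the horizon.
    """
    total = len(anomalies)
    if total == 0:
        return 0
    for offset in range(horizon_days + 1):
        day = start + timedelta(days=offset)
        still_open = sum(1 for a in anomalies
                         if a["closed"] is None or a["closed"] > day)
        if still_open / total <= max_open_share:
            return offset
    return None


# Hypothetical batch: ten anomalies opened on the same day, nine of them
# closed one per day, one never processed.
start = date(2021, 1, 1)
anomalies = [{"opened": start, "closed": start + timedelta(days=k)}
             for k in range(1, 10)]
anomalies.append({"opened": start, "closed": None})

stale = unprocessed(anomalies, as_of=date(2021, 3, 1), min_age_days=30)
stabilised_after = days_to_stabilise(anomalies, start)
```

A measure of this kind gives one possible basis for deciding when a snapshot of the database is stable enough to be taken, while the list of stale anomalies points to cases that may never be processed.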

Some of these indicators could also be useful to better exploit administrative sources for statistical purposes. When it supports back tracking, the ATMS becomes an even more powerful instrument for improving data quality, as part of a preventive approach (Figure 1).

The back tracking method is widely applicable and can be used for any application field. Thomas Redman initiated it, under the name data tracking.

Figure 3. The Data Flow between the Application, the Main Database and the ATMS

Thomas Redman’s data tracking

The data tracking proposed by Thomas Redman of AT&T Labs in the USA (Redman, 1996) aims to quantitatively assess the formal validity of the values entered into a database and to structurally improve its processing (Redman explicitly rules out questions of data interpretation, which he considers to be too complex).

According to Redman, a database is similar to a lake. Instead of occasionally cleaning the lake bottom that is continuously fed by external flows and currents (as advocated by data cleansing, an automatic correction method), Redman proposes, on the basis of a random sample of data taken at the input point (upstream) of the information system, methodically analysing the processes and flows that allow the data to be assembled. The end goal is to determine the causes of the identified formal errors in order to structurally fix them at source (e.g. bugs or programming errors).

The operation is based on the principle that a small number of flows, processes or practices are responsible for a large percentage of formal errors. The approach refers to the Pareto principle, also known as the “80/20 principle”. Therefore, it is based on the assumption that a significant proportion of problem cases (about 80%) are provoked by about 20% of the possible causes.
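The Pareto reasoning can be made concrete with a short sketch that ranks causes by the number of formal errors attributed to them and keeps the smallest set covering a target share. The flow names and counts are invented for the illustration, and the 80% target is the rule of thumb mentioned above rather than a fixed parameter of the method.

```python
def pareto_causes(error_counts, coverage=0.8):
    """Smallest set of causes, by decreasing count, reaching the coverage share."""
    total = sum(error_counts.values())
    if total == 0:
        return []
    selected = []
    covered = 0
    for cause, count in sorted(error_counts.items(),
                               key=lambda item: item[1], reverse=True):
        selected.append(cause)
        covered += count
        if covered / total >= coverage:
            break
    return selected


# Hypothetical counts of formal errors per upstream flow.
error_counts = {"flow A": 500, "flow B": 300}
error_counts.update({f"flow {letter}": 25 for letter in "CDEFGHIJ"})

main_causes = pareto_causes(error_counts)
```

With these invented figures, two flows out of ten account for 800 of the 1,000 errors, so the investigation would start with those two.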

Back tracking: from orthogonality to interaction

During the 2000s, the large-scale application of Redman’s method (Boydens, 2010) led to an original method called back tracking (Boydens, 2018). Still based on Redman’s Pareto principle, back tracking improves upon his method in relation to five important aspects:

- the database model is extended and linked to a history of anomalies and their processing according to the principles outlined above;

- continuous monitoring of the cases deemed most strategic is implemented from the ATMS, in order to make the quality management of the database easier. Monitoring the processing of anomalies makes it possible to detect, in highly evolving application fields, the emergence of new observable phenomena requiring a specific adaptation of the database definition domain, or even of the associated norms, in order to reduce the number of fictitious anomalies to be processed;

- the sample of cases selected is not random as in Redman’s case, given that, in principle, we have knowledge of all the data deemed problematic (via the comprehensive history of anomalies and their processing). The approach allows a more precise and representative selection of cases to be investigated from the outset, reducing the inevitable margin of error of a random sample;

- beyond formal errors (which are deterministic and detectable via an algorithm, the only cases taken into account by Redman), questions of data interpretation are also addressed, as the legislation (or any empirical theory used) and the realities it captures evolve;

- the tracking is carried out backwards: in conjunction with the database suppliers, we start from the final situation (main database and ATMS) and work backwards, step by step, to each source and process that enabled its development, until we identify the causes of problem cases. The aim is to avoid processing unnecessary data or flows and to work more economically, thus increasing the benefits of the operation. Indeed, the search for the structural origins of anomalies ends as soon as all their causes have been detected, by type, without all the flows being unnecessarily examined (in the case of Redman’s data tracking, all the flows must be examined, which is a waste of time, as these can include dozens or even hundreds of processes).
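The backward search and its stopping rule can be sketched as a traversal of the flows feeding the database. This is a deliberately simplified reading: in practice each step is an investigation carried out with the information providers, whereas here it is reduced to a hypothetical `inspect` function, and the flow graph and findings are invented for the example.

```python
from collections import deque


def back_track(upstream, start, anomaly_types, inspect):
    """Walk backwards from `start` until every anomaly type has a cause.

    upstream: dict mapping a node to the nodes that feed it.
    inspect:  function returning the anomaly types caused at a node
              (a stand-in for the human investigation).
    Returns (causes, visited): cause per anomaly type, nodes examined in order.
    """
    unexplained = set(anomaly_types)
    causes = {}
    visited = []
    queue = deque([start])
    seen = {start}
    while queue and unexplained:  # stop as soon as all causes are found
        node = queue.popleft()
        visited.append(node)
        for anomaly_type in inspect(node) & unexplained:
            causes[anomaly_type] = node
        unexplained -= set(causes)
        for parent in upstream.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return causes, visited


# Hypothetical flow graph and findings, for illustration only.
upstream = {
    "main database": ["intake service"],
    "intake service": ["employer portal", "batch import"],
    "employer portal": ["payroll software"],
    "batch import": ["legacy file"],
}
findings = {"batch import": {"duplicates"},
            "employer portal": {"unknown category"}}

causes, visited = back_track(
    upstream, "main database", {"duplicates", "unknown category"},
    lambda node: findings.get(node, set()),
)
```

In this example the search stops after four nodes: the two most upstream sources are never examined, which is the economy that distinguishes the backward approach from an exhaustive review of all flows.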

Therefore, the back tracking operation is based on prior monitoring of anomalies and transactions, which is set up after the specification of strategic quality indicators. It is then possible to identify, within the processes and data flows, in partnership with the information provider and the database manager, the elements at the source of the production of a large number of systematic anomalies or anomalies deemed to be strategic: inappropriate processing of certain data sources, emergence of new situations not yet taken into account in the database definition domain, inadequate interpretation of legislation, poorly documented concept, programming errors, etc. On this basis, a diagnosis can be made and sustainable, structural corrective actions can be taken (correction of formal code in programmes, restructuring of processes, adaptation of the interpretation of a law, clarification of documentation, etc.).

In the field of social security in Belgium (in this case, based on the DmfA database mentioned above), the “full-scale” tests carried out in conjunction with developers, specialists in the application field and senders of the information were conclusive. The benefits of the method were repeatedly demonstrated in the 2000s (structural reduction in the number of anomalies of 50% to 80%, time saved through a reduction in the tedious intellectual work of verification, better interpretation of the law, faster financial collection and redistribution, etc.). Given its widely applicable nature, the method was enshrined in Belgian legislation in the form of a binding Royal Decree in 2017 (Boydens, 2018). This legislation falls within the framework of the “quality barometers” applied to social security. Although the effects of the method are lasting and structural, it must be applied on a recurrent basis in order to take into account possible new phenomena, with the effort required gradually decreasing over time. It is based on a rigorously documented organization, procedures and information system (Boydens, 2010).

The ATMS mechanism that supported the back tracking operations has so far been deployed on a large scale via a hierarchical Database Management System (DBMS) and specific outsourced code associated with a generic rules engine. Now, a new generic ATMS development is applicable to any relational DBMS and to the current technologies.

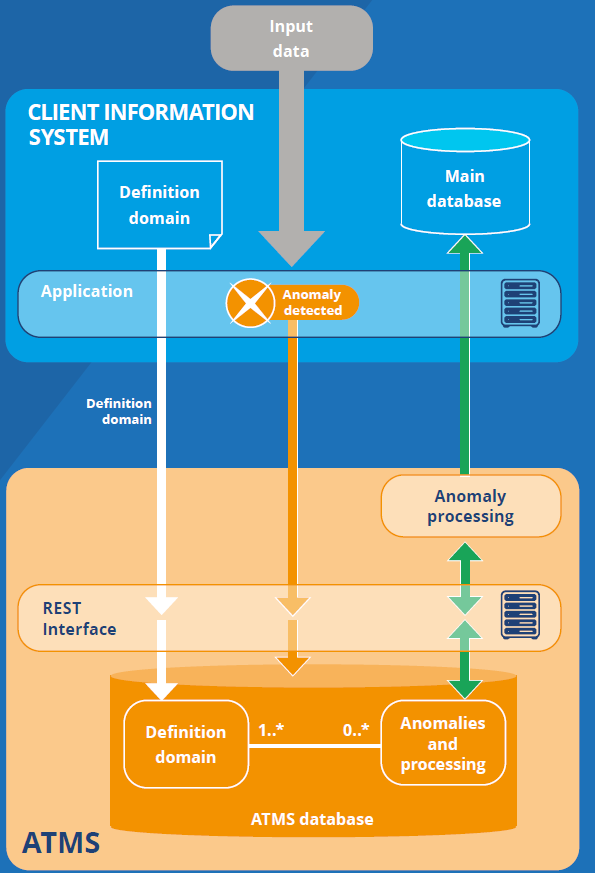

The relational ATMS: a dynamic between data in production and anomaly management

The Smals Data Quality Skills Centre (Centre de Compétence en Qualité de Données), which is based on a synergy between the Databases department and the Research department, undertook the implementation of a prototype applied to relational DBMSs and related standards. The prototype is based on the complete open data version of the Crossroads Bank for Enterprises, which is the Belgian equivalent of the SIRENE business register in France.

In this new model, the main database and the ATMS are separated, which requires agreement on the routing of data between both systems and the definition of principles for storing the anomalies and associated transactions.

The main database and the ATMS: both hemispheres of the world represented

One of the key traits of the specifications of the relational ATMS is the separation made between the main database on the one hand, and the database of anomalies and their processing on the other. Figure 4 illustrates this separation, which is based on the following two principles:

- the main database represents the concepts of the domain of activity that it serves. As such, it is modelled to process data that strictly respect the definition domain, under the closed world assumption (see above);

- it is possible to factor the recording and processing of anomalous data into a dedicated system separate from the main database if the latter is designed to be expandable.

Beyond satisfying theoretical design hypotheses, the separation of the main database and the ATMS opens the door to a number of significant benefits:

- the design of the main database is simplified, as it does not need to take into account anomalies or their processing;

- the content of the main database remains compliant with the definition domain at all times, without preventing its evolution;

- anomaly management can be the subject of standardized processes and tools that can be reused across a team, a project or an entire organization;

- the existence of a dedicated ATMS encourages greater consideration of the definition domain at the earliest possible stage in the design of the IT system, which should take responsibility for anomaly detection and data routing accordingly.

Figure 4. Dynamic Separation between the Main Database and the ATMS

Data routing

When data enter the information system, their compliance with the definition domain (see above) is verified. If non-blocking anomalies are detected, the corresponding data are sent to the ATMS (Figure 3). Only after they have undergone verification and intellectual processing, which are automatically logged, are the data integrated into the main database. In the case of validation, as discussed above, the definition domain may need to be adapted before the records can be accepted into the main database.
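The routing principle can be sketched as follows. This is a simplified illustration under stated assumptions, not the prototype’s implementation: the definition domain is reduced to two hypothetical rules, a record without an identifier is assumed to be a blocking case that is rejected outright, and the names `Stores`, `check` and `route` are invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical reference table standing in for part of the definition domain.
KNOWN_ACTIVITY_CODES = {"A01", "B02"}


@dataclass
class Stores:
    """In-memory stand-ins for the main database, the ATMS and a reject log."""
    main_db: list = field(default_factory=list)
    atms: list = field(default_factory=list)
    rejected: list = field(default_factory=list)


def check(record):
    """Return (blocking, anomalies) for a record against the assumed rules."""
    blocking = "id" not in record  # assumption: no identifier means blocking
    anomalies = []
    if record.get("activity_code") not in KNOWN_ACTIVITY_CODES:
        anomalies.append("unknown_activity_code")
    if not record.get("name"):
        anomalies.append("missing_name")
    return blocking, anomalies


def route(record, stores):
    """Send the record to the reject log, the ATMS or the main database."""
    blocking, anomalies = check(record)
    if blocking:
        stores.rejected.append(record)
        return "rejected"
    if anomalies:
        # Non-blocking anomalies: the data wait in the ATMS for processing.
        stores.atms.append({"record": record, "anomalies": anomalies, "status": "open"})
        return "atms"
    stores.main_db.append(record)
    return "main_db"


stores = Stores()
route({"id": 1, "name": "Acme", "activity_code": "A01"}, stores)
route({"id": 2, "name": "", "activity_code": "Z99"}, stores)
route({"name": "No identifier", "activity_code": "A01"}, stores)
```

Only the first record complies with the assumed rules and reaches the main database directly; the second waits in the ATMS with its list of anomalies until an officer processes it.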

This automatic routing does not exclude by any means the possibility of an officer manually triggering an anomaly on data that conform to the definition domain and are already present in the main database. This could be justified, for example, by a field inspection which reveals that a record is fraudulent or obsolete.

The flow of data between the main database and the ATMS must be fluid and standardized; it is therefore implemented in automated procedures, the principle of which has already been illustrated in Figure 3. The bidirectional nature of this flow requires the ability to preserve or reconstruct the original state of the information from the ATMS. To that end, two constituent parts are used to store the definition domain and the associated anomalies and metadata respectively (Figure 4); we will not detail this function here but more references are available online (Boydens, Hamiti and Van Eeckhout, 2020).

Implementation of the prototype and outlook

The prototype fully implements the database of the ATMS. This was developed and tested repeatedly by exposing it early on to an information system simulated by a rudimentary application and a realistic main database, the open data of the Belgian Business Register (Répertoire des entreprises belges) (Box 2). In this context, four usage scenarios have been implemented by way of examples:

- correction of a simple anomalous value;

- correction of an anomaly triggered by the incompatibility of input data with the record already existing in the main database;

- batch validation of anomalies: the processing of an anomaly (in this case, validation) is automatically propagated to a series of other anomalies designated as similar by the officer;

- finally, the creation of several views making it possible to track the anomalies and their processing over time at a more or less aggregated level. This fourth scenario is essential in guiding the back tracking operations as described above.
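The third and fourth scenarios can be sketched together. The example below uses Python’s built-in `sqlite3` module as a stand-in for a relational DBMS; the table `anomaly`, the view `anomaly_summary` and the function `validate_batch` are hypothetical names chosen for illustration and do not reflect the prototype’s actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE anomaly (
        id           INTEGER PRIMARY KEY,
        anomaly_type TEXT NOT NULL,
        status       TEXT NOT NULL DEFAULT 'open',
        processed_by TEXT
    );
    -- Aggregated tracking view: number of anomalies per type and status.
    CREATE VIEW anomaly_summary AS
        SELECT anomaly_type, status, COUNT(*) AS n
        FROM anomaly
        GROUP BY anomaly_type, status;
    """
)
conn.executemany(
    "INSERT INTO anomaly (anomaly_type) VALUES (?)",
    [("unknown_activity_code",)] * 3 + [("missing_name",)],
)


def validate_batch(conn, reference_id, similar_ids, officer):
    """Validate one anomaly and propagate the decision to the anomalies
    that the officer designated as similar. Returns the number of rows updated."""
    ids = [reference_id, *similar_ids]
    placeholders = ",".join("?" for _ in ids)
    with conn:  # single transaction: all anomalies are validated or none
        cur = conn.execute(
            "UPDATE anomaly SET status = 'validated', processed_by = ? "
            f"WHERE status = 'open' AND id IN ({placeholders})",
            [officer, *ids],
        )
    return cur.rowcount


validated = validate_batch(conn, 1, [2], "officer_1")
summary = conn.execute(
    "SELECT anomaly_type, status, n FROM anomaly_summary "
    "ORDER BY anomaly_type, status"
).fetchall()
```

Running the update in a single transaction is a design choice of this sketch: the batch is either validated as a whole or left untouched, and the view then reflects the new distribution of anomalies by type and status.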

As the prototype was technically successful and aroused the interest of the contracting authority, a pilot project is underway. This initiative can later be applied on a full scale to the databases of the Belgian administration. It is also widely applicable and can be used for any information system. There are several possible scenarios for the implementation of an ATMS. Ideally, it is taken into consideration from the design stage of the information system, together with the main database and data quality tools (Box 1), if available. A re-engineering project is also an appropriate time to integrate an ATMS into an existing system.

The recurrent contributions of back tracking mentioned above, a method that must be supported by an ATMS, set a precedent when applied to the DmfA database, and one that strongly encourages the widespread application of this prototype adapted to recent technologies: structural reduction in the number of anomalies by 50% to 80%, time saved thanks to a reduction in the tedious intellectual work of verification, better interpretation of the norm, and faster financial collection and redistribution. More generally, the benefits include an improvement in the quality of any empirical database and the establishment of a partnership between database managers and suppliers of information. As seen previously, the service can benefit both the managers of administrative databases, the quality of which is improved, and statisticians who want to use them for other purposes.

Box 2. Some technical details on the ATMS

The prototype described in this article was developed based on the PostgreSQL DBMS; it is nevertheless transposable to any system allowing the manipulation of data in JSON format. This “system-less” notation allows the widest variety of anomalies to be stored within a traditional relational table and exchanged as messages between the main information system and the ATMS. The processing that produces and consumes these JSON messages is implemented on both sides as stored procedures that can be accessed by querying the databases directly; in practice, in a production project, this logic would typically be exposed via REST interfaces.
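As a rough illustration of this principle, the sketch below stores an anomaly as a JSON message in an ordinary relational table and reads it back. It uses Python’s `json` and `sqlite3` modules as a stand-in; the message fields, the table `atms_anomaly` and the function names are assumptions made for the example, not the prototype’s format. In PostgreSQL, the payload column could for instance use the native `jsonb` type.

```python
import json
import sqlite3


def make_anomaly_message(table, key, original, anomalies):
    """Build a JSON message describing anomalous data (hypothetical layout).
    The original state is kept so that it can be restored later."""
    return json.dumps(
        {"table": table, "key": key, "original": original, "anomalies": anomalies},
        sort_keys=True,
    )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE atms_anomaly (id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
)


def store_message(conn, message):
    """Store a JSON message; json.loads raises ValueError if it is malformed."""
    json.loads(message)
    with conn:
        cur = conn.execute("INSERT INTO atms_anomaly (payload) VALUES (?)", (message,))
    return cur.lastrowid


def load_message(conn, anomaly_id):
    """Read a stored message back as a Python dictionary."""
    row = conn.execute(
        "SELECT payload FROM atms_anomaly WHERE id = ?", (anomaly_id,)
    ).fetchone()
    return json.loads(row[0]) if row else None


message = make_anomaly_message(
    table="enterprise",
    key={"enterprise_number": "0123.456.789"},  # fictitious identifier
    original={"activity_code": "Z99"},
    anomalies=[{"field": "activity_code", "type": "unknown_activity_code"}],
)
anomaly_id = store_message(conn, message)
restored = load_message(conn, anomaly_id)
```

Because the payload is free-form, anomalies of very different shapes can share one table, which is the property the box attributes to the JSON notation.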

In this prototype, the volume of the main database is about 4.4 gibibits (Gibit), for a total of 30.2 million records distributed mainly over 9 tables. Running the first three scenarios in the most demanding way possible (looped operation of 100,000 iterations without any explicit optimization*) made it possible to observe the following:

- a constant execution time, often in the order of a thousandth of a second or less, for most computational and light insertion operations (e.g. generating a complete anomaly creation message, allocation of an anomaly for processing by an officer and marking an anomaly as processed);

- a linear increase in execution time for larger writing operations (recording the corrected version of an anomaly);

- a linear increase in the consumption of storage space.

*For example, PostgreSQL makes it possible to explicitly trigger operations such as “VACUUM ANALYSE” in order to optimize storage and the execution plans for queries. Other relational DBMSs offer similar functions.

Legal references

Arrêté royal du 2 février 2017 modifiant le chapitre IV de l’arrêté royal du 28 novembre 1969 pris en exécution de la loi du 27 juin 1969 révisant l’arrêté-loi du 28 décembre 1944 concernant la sécurité sociale des travailleurs. In: Moniteur belge. [online]. [Accessed 31 May 2021].

Published on: 02/10/2023

By anomaly, we mean here a formal error (e.g. a mandatory value not completed), but also a presumed error requiring human interpretation (e.g. presumed duplication between highly similar records, emergence of a new activity category not taken into account in the reference tables, etc.). A typology of anomalies is proposed hereinafter.

[Editor’s note] This point is regularly raised in the articles of the Courrier des statistiques, see also the article by Christian Sureau and Richard Merlen in this issue.

Salaries and working hours database (Loon en ArbeidsTijdsGegevensbank).

Multifunctional declaration (Multifunctionele Aangifte).

All of this work is carried out in accordance with the European GDPR (General Data Protection Regulation).

See (Bens and Schukraft, 2019). By way of examples, the authors cite monetary quantities, such as income and turnover (p. 15) or the need for a unique identifier for individuals and companies, regardless of a given use (taxation, social security, trade, etc.) (pp. 14-15).

By strategic issues, we mean fundamental issues with regard to the area of application and the aims to reach: in the field of social security, for example, it can be about calculating the contributions due by an employer (the rates vary according to the category of activity) or of the worker’s social security rights (access to health care, right to unemployment benefits, etc.), which may depend, among other things, on the validity of the employer’s identifying data and the interpretation of the associated anomalies, which are also “strategic”.

These cases can only be identified indirectly, via lateral means, including the ATMS (see below).

The hermeneutic approach consists in considering empirical phenomena in terms of interactions based on a more general conceptual framework built to give them meaning. However, any interpretative approach raises a paradox: that of the “hermeneutic circle” (Aron, 1969). Each observation only makes sense when confronted with a whole, with a “pre-understanding”. However, semantics of the whole are based on the interpretation of its constituent elements. The construction process required by hermeneutics is, by nature, always unfinished.

Conceptually, logically and physically, ATMS is a database. The term “system” refers to a broader framework, including processes, applications and the team managing the database and processing anomalies, as well as the information providers.

In database modelling, application field means the segment of observable reality that we are trying to represent through the database.

As mentioned above, anomalies are specified at a given time by reference to a definition domain: missing value, inconsistent value compared to another, etc. These types of anomalies are likely to evolve over time, if necessary, via a version management of the definition domain.

This is the contractor, project owner or any manager who has control of the database at the time of identification of the problematic elements.

See the legal references at the end of the article.

In concrete terms, beyond a certain threshold of anomalies set by the administration, suppliers of social declarations are obliged to reduce the number of anomalies within a given period, by participating in a back tracking operation.

Smals is an IT company founded in 1939 that provides services to the Belgian federal and regional administrations.

As a corollary, the re-synchronisation of the status of the two databases in the event of a disaster must be thought out in advance. Various more or less sophisticated options are possible, ranging from simply shutting down one of the two systems if the other stops responding, to using a message queue within a dedicated third-party system.

The complete state of the information system is therefore composed of the union - whether inclusive or exclusive, with intersection not being accepted in any event - between valid data and unprocessed anomalies, distributed respectively in the main database, on the one hand, and in the ATMS, on the other.

The most frequent types of anomalies, anomalies validated by whom and when, anomalies not processed, etc.

If the original system already has some form of anomaly management in place, however, transposing this content to the ATMS may represent some effort depending on the degree to which anomalies and their processing metadata are merged and broken down in the system.

Further reading

AGENCE BELGA, 2018. Des lacunes dans la base de données belge sur les terroristes. In: La Libre Belgique. [online]. 1 March 2018. [Accessed 31 May 2021].

ARON, Raymond, 1969. La philosophie critique de l’histoire. 1969. Édition Librairie philosophique J. Vrin. Collection Points – Sciences humaines. ISBN 2560848158182.

BADE, David, 2011. It's about Time!: Temporal Aspects of Metadata Management in the Work of Isabelle Boydens. In: Cataloging & Classification Quarterly (The International Observer). 16 May 2011. Volume 49, n°4, pp. 328-338.

BATINI, Carlo and SCANNAPIECO, Monica, 2016. Data and Information Quality. Dimensions, Principles and Techniques. Springer, New York. ISBN 978-3-319-24106-7.

BENS, Arno and SCHUKRAFT, Stefan, 2019. Modernisation des registres administratifs en Allemagne – Développements actuels et enjeux pour la statistique publique. In: Courrier des statistiques. [online]. 27 June 2019. Insee. N°N2, pp. 10-20. [Accessed 31 May 2021].

BOYDENS, Isabelle, 1999. Informatique, normes et temps. Bruylant, Bruxelles. ISBN 2-8027-1268-3.

BOYDENS, Isabelle, 2010. Strategic Issues Relating to Data Quality for E-government: Learning from an Approach Adopted in Belgium. In: Practical Studies in E-Government. Best Practices from Around the World. 17 November 2010. Springer. New York. pp. 113-130 (chapter 7). ISBN 978-1489981899.

BOYDENS, Isabelle, 2012. L’océan des données et le canal des normes. In: La normalisation : principes, histoire, évolutions et perspectives. [online]. July 2012. Annales des Mines, Responsabilité et Environnement. Édition FFE. Paris. N°2012/3 (67), pp. 22-29. [Accessed 31 May 2021].

BOYDENS, Isabelle, 2018. Data Quality & « back tracking » : depuis les premières expérimentations à la parution d’un Arrêté Royal. [online]. 14 May 2018. Smals Research. [Accessed 31 May 2021].

BOYDENS, Isabelle, 2021. Qualité de l’information et des documents numériques. Course taught at the Université libre de Bruxelles, Master in Information and Communication Science and Technology.

BOYDENS, Isabelle, HAMITI, Gani and VAN EECKHOUT, Rudy, 2020. Data Quality: “Anomalies & Transactions Management System” (ATMS), prototype and “work in progress”. [online]. 8 December 2020. Smals Research. [Accessed 31 May 2021].

BRAUDEL, Fernand, 1949. La Méditerranée et le monde méditerranéen à l’époque de Philippe II. Armand Colin, Paris.

BYRNES, Nanette, 2016. Why we should expect algorithms to be biased. In: MIT Technology Review. [online]. 24 June 2016. [Accessed 31 May 2021].

CHAPUIS, Nicolas, 2018. Des fichiers de police mal organisés et trop complexes. In: Le Monde. 17 October 2018.

DIERICKX, Laurence, 2019. Why News Automation Fails. [online]. February 2019. Computation & Journalism Symposium, Miami, USA. [Accessed 31 May 2021].

ELIAS, Norbert, 1986. Du temps. Édition Fayard. Paris. ISBN 978-2818503454.

HAINAUT, Jean-Luc, 2018. Bases de données – Concepts, utilisation et développement. October 2018. Édition Dunod, Paris, collection InfoSup. 4th edition. ISBN 978-2100790685.

HAMITI, Gani, 2019. Data Quality Tools: concepts and practical lessons from a vast operational environment. [online]. 13 March 2019. Université libre de Bruxelles. Lecture. [Accessed 31 May 2021].

HAND, David J., 2018. Statistical challenges of administrative and transaction data. In: Journal of the Royal Statistical Society. [online]. Series A, 181, Part 3, pp. 555-605. [Accessed 31 May 2021].

HUMBERT-BOTTIN, Élisabeth, 2018. La déclaration sociale nominative. Nouvelle référence pour les échanges de données sociales des entreprises vers les administrations. In: Courrier des statistiques. [online]. 6 December 2018. Insee. N°N1, pp. 25-34. [Accessed 31 May 2021].

MADNICK, Stuart E., WANG, Richard Y., LEE, Yang W. and ZHU, Hongwei, 2009. Overview and Framework for Data and Information Quality Research. In: Journal of Data and Information Quality. [online]. 1 June 2009. Volume 1, n°1, pp. 1–22. [Accessed 31 May 2021].

RADIO, Eric, 2014. Information Continuity: A Temporal Approach to Assessing Metadata and Organizational Quality in an Institutional Repository. In: Metadata and Semantics Research. 27-29 November 2014. 8th Research Conference, MTSR 2014, Karlsruhe. Springer, Cham. Communications in Computer and Information Science, vol 478. ISBN 978-3-319-13673-8.

REDMAN, Thomas C., 1996. Data Quality for the Information Age. Artech House Computer Science Library. ISBN 978-0890068830.

RENNE, Catherine, 2018. Bien comprendre la déclaration sociale nominative pour mieux mesurer. In: Courrier des statistiques. [online]. 6 December 2018. Insee. N°N1, pp. 35-44. [Accessed 31 May 2021].

RIVIÈRE, Pascal, 2018. Utiliser les déclarations administratives à des fins statistiques. In: Courrier des statistiques. [online]. 6 December 2018. Insee. N°N1, pp. 14-24. [Accessed 31 May 2021].

RIVIÈRE, Pascal, 2020. Qu’est-ce qu’une donnée ? Impact des données externes sur la statistique publique. In: Courrier des statistiques. [online]. 31 December 2020. N°N5. [Accessed 31 May 2021].

SRIVASTAVA, Divesh, SCANNAPIECO, Monica and REDMAN, Thomas C., 2019. Ensuring High-Quality Private Data for Responsible Data Science: Vision and Challenges. In: Journal of Information and Data Quality. 4 January 2019. Volume 1, n°11, pp. 1-9.

VAN DER VLIST, Eric, 2011. Relax NG. May 2011. Édition O’Reilly Media. ISBN 0596004214.