Courrier des statistiques N9 - 2023

Integrating administrative data into a statistical process: industrialising a key phase

Official statistics increasingly rely on external sources, particularly administrative data, to produce statistics. This requires greater industrialisation of the production and integration processes for these data, in order to make them more secure, more traceable and as reproducible as possible.

The aim is to implement a general data integration framework based on an automated approach to structured data delivered by an external producer. This involves setting up a pipeline with checkpoints to ensure that the succession of tasks (renaming, data restructuring, recoding, pseudonymisation, etc.) is carried out correctly and to stop the process as soon as any problems are encountered. In addition, the use of standards and active metadata upstream of this pipeline allows the designer to be as autonomous as possible, making it easier to adapt to changes in external sources.

- Source qualification: a key prerequisite for integration

- What does this involve?

- Integration, a fundamental step in the production of statistics

- Integration: the phase in which statistical surveys produced using administrative data are separated out

- Description of the different stages of the integration process

- Box 1: The ARC module

- Renaming variables: essential for better understanding them

- Restructuring data by statistical unit and linking them

- Pseudonymising the data

- Recoding the values of the variables

- Calculating derived variables

- Incorporating filters

- Establishing the data pipeline

- Box 2: VTL, a language for validating and transforming data

- Integrating data is good, but with metadata it is even better…

- … with a quality measure performed at the earliest opportunity

- Perspectives

- Box 3. Data transformation: the international framework

Official Statistics produces figures and studies based on surveys, which it manages in a fully autonomous manner, from their design to their dissemination following the collection and non-response processing phases. However, with the abundance of data produced and made available by other bodies, the mass use of external sources and particularly administrative data to produce statistics is increasing rapidly (Lamarche and Lollivier, 2021, Hand 2018). This practice is also growing internationally (UNECE Integration, statswiki, Cros 2014). As a result, there is now a strong desire to further industrialise the integration of administrative sources in order to improve the security, traceability and reproducibility of this data integration.

It is often the case that administrative data cannot be used directly (Courmont, 2021). Their integration therefore consists of uncoupling data and metadata from their original management universe and linking them to the statistical world (statistical unit, concepts, nomenclature, etc.). In order to do so, future users must make the content of this new source their own.

Where the quality of the administrative data is deemed to be sufficient for the production of reliable statistics (“source qualification”), the first stage in the process is to integrate external information into the statistical information system. As is the case for survey-based data collection, integration is the first step in statistical processing (check, verification, adjustment) enabling the transition from raw data to data that can be disseminated.

The objectives, the precise scope and the various stages of this data integration process are described in this article. The qualification phase is only briefly touched upon here, even though it is essential. Administrative sources may have gaps in coverage (e.g. missing data for Alsace-Lorraine as they were defined after the Concordat) or use concepts far removed from those used by Official Statistics (for example: data collected from electricity meters for the purposes of billing household consumption): these problems must be identified upstream so that they can be addressed as soon as possible.

Source qualification: a key prerequisite for integration

Before integrating a source, it is important to ensure that it is of adequate quality for use for statistical purposes. It is therefore necessary to contact the producer of the data to verify that the source is:

- usable (the constituent data can be restructured to measure statistical concepts);

- complete (without any obvious under-coverage that would prevent its use);

- available within a reasonable time frame;

- documented (presence of metadata).

It should be noted that the UK Office for National Statistics sends statisticians directly to the administrative bodies in order to perform this qualification phase (Fermor-Dunman and Parsons, 2022).

What does this involve?

Administrative data are gathered and structured by administrative bodies to suit their own needs. For example, income tax returns are collected at the tax household level (unit managed by the DGFiP). This concept refers to all of the individuals recorded on a single tax return and does not directly correspond to the concepts of the individual and the household used in statistics. A tax household may comprise one or several individuals and there may be multiple tax households within a single “statistical” household: for example, an unmarried couple counts as two tax households if each partner completes their own tax return. This basic administrative information must therefore be restructured in order to be used for statistical purposes.

During the integration phase, the individual administrative data are transformed into raw individual data suitable for statistical use. Only essential information is selected from external sources in compliance with the principles of minimisation and necessity that statisticians are bound by. Switching from the administrative world to the statistical world involves a change of governance. Statisticians are permitted to modify the data without any need to inform the original producer of this. These data then become statistical data and are therefore subject to statistical confidentiality.

This phase makes it possible to build a coherent set from various different files, or even from several different sources. A very interesting example of this is FIDELI. This file incorporates several tax sources: a source describing the members of the taxable households, a source containing information regarding the income tax of those households, a source containing information on housing tax and another containing information on property tax, each of which may comprise multiple files (more than one hundred per source) for the purposes of obtaining information covering the whole of France.

The integration produces the raw version of the data, which will feed into the remainder of the process. At this stage, it is simply a case of reorganising the information without checking it in any way, and certainly without correcting it. However, some traceability is required to guarantee the reproducibility of the creation of this initial database.

Integration is the starting point of the statistical process. It provides a point of reference to which we can return in the event of problems in subsequent statistical processing. By comparing the raw data with the data modified by managers during the “manual and automatic checking and correction” phase, it is possible to judge the effectiveness of the checks put in place. Likewise, by comparing the raw data with the data obtained following automatic adjustments, it is possible to measure the variance introduced by such processing and the increase in quality for various indicators, particularly with regard to the reduction of bias.

Integration, a fundamental step in the production of statistics

If we compare the data life cycles of statistical production based on a survey and statistical production based on an external source, we notice that they differ in the upstream phase, but that they are identical following processing aimed at transforming raw statistical data into data that can be disseminated.

As per the GSBPM (UNECE, GSBPM), data integration precedes the downstream phases of data processing and analysis, which result in data that can be disseminated (check, manual and automatic correction, validation of individual data, aggregation and validation of aggregated data, dissemination of data and archiving of data). We do not discuss these phases in this article.

This means that if the administrative file contains values that are obviously incorrect (30 February, for example), such values will not be modified during the data integration phase, but corrected with a possible value during the subsequent “check, manual and automatic correction and validation of individual data” phase.

Integration is the first stage of the process. This is also the point at which statistical metadata that will be of use throughout the life cycle of the data are initialised (concepts, description of variables, lists of codes and nomenclatures). This makes it possible to set up metadata-driven processes (see below).

Integration: the phase in which statistical surveys produced using administrative data are separated out

A statistical survey is handled by statisticians from the start, and they ensure that the questionnaire is designed in such a way that the data collected are in line with statistical concepts and units. As a result, there is no need to transform the data following their collection and they can be used directly as raw statistical data.

Conversely, external data must be transformed (creation of statistical units, renaming, etc.) before they can be considered raw statistical data. Some metadata are initialised during this integration phase, such as the definition of variables, the rules for their calculation, their links with statistical concepts, etc.

At INSEE there are statistical processes that link survey data to administrative data in order to produce robust statistics while limiting the statistical burden, namely the time required for respondents to complete the survey. An example of this is the Tax and Social Incomes Survey (enquête Revenus Fiscaux et Sociaux), which merges data from the Labour Force survey (enquête Emploi) with tax and social data, or the structural business statistics process (système d’élaboration des statistiques annuelles d’entreprises – ESANE), which is based on the results of annual statistical sector-based surveys, tax data, and employment data (Déclaration sociale nominative – DSN).

Description of the different stages of the integration process

Administrative data are often linked to public policy, the legal contours of which can change rapidly over time (introduction or withdrawal of a tax or a social benefit, etc.). It is therefore essential that the tools and methods developed during this data reception phase be as transparent and adaptable as possible.

Integration involves a succession of elementary operations written in a declarative manner, i.e. by specifying the what and not the how, which can easily be “replayed” to obtain a permanent and reusable processing chain. To achieve this reproducibility and ensure adaptability, it is essential to make use of standards and to provide statisticians with tools that allow them to work as independently as possible. The VTL language is a solution that meets both of these requirements. The transformation of administrative data into raw statistical data involves a sequence of basic tasks (similar to function composition in mathematics), which can be broken down into the following six categories:

- renaming the selected variables;

- restructuring data by statistical unit;

- recoding;

- calculating derived variables;

- filtering useful records;

- pseudonymisation.

This order is for information only and may differ from one source to another. In particular, the pseudonymisation and filtering phases may occur at different times within the sequence.
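The idea of a replayable sequence of elementary tasks can be sketched as function composition. The following Python sketch is purely illustrative: the step functions, the record layout and the `compose` helper are assumptions made for this example and do not reproduce any INSEE tool.

```python
from functools import reduce

def compose(*steps):
    """Chain steps left to right: compose(f, g)(x) == g(f(x))."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)

# Hypothetical elementary steps; each takes and returns a list of dict records.
def rename(rows):
    # Keep only the selected variable and give it an explicit name.
    return [{"decl_wage": r["1AJ"]} for r in rows]

def keep_declared(rows):
    # Filter out records whose wage is missing.
    return [r for r in rows if r["decl_wage"] is not None]

# The order of the steps is just the order of the arguments,
# so it can differ from one source to another.
integrate = compose(rename, keep_declared)
raw = integrate([{"1AJ": 1200}, {"1AJ": None}])
```

Because the chain is an ordinary value, replaying the integration on a new delivery amounts to calling `integrate` again on the new records.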

This categorisation is based on the “source hosting service” established by the Statistical Directory of Individuals and Housing (répertoire statistique des individus et des logements – RESIL) program (Durr et al., 2022), which will take on this role of integrating administrative data into the demographic and social information system using the ARC (accueil réception contrôle – receipt, acceptance, control) tool (Box 1).

Box 1: The ARC module

The ARC (accueil réception contrôle – receipt, acceptance, control) module has been designed as a block of shared infrastructures for all declarations, and is implemented by the employment and activity income information system (Système d’Information sur l’Emploi et les Revenus d’Activité). It has been developed as open source software, which facilitates its shared use. It is now used by other systems within INSEE and even by third parties, most notably by ISTAT (the Italian National Institute of Statistics).

This functional module allows for hosting, checking and transformation of administrative data into raw statistical data, with the possibility of upstream filtering.

The user is able to interactively modify and improve loading parameters (flat file or XML* file), the recognition of standards associated with declarations and the checks applied to data and the transformation of administrative data into statistical data (mapping) over time. Given the very large volumes of data to be processed, this possibility to interactively modify their processing, coupled with possible specification errors, could lead to production risks.

In order to limit this risk, users have access to test areas that are similar to, but separate from, the production area, in which they can test and qualify the new rules specified and measure their impact on the data loaded prior to their live implementation.

To ensure that the ARC is able to receive and check these different file types, three key pieces of information must first be provided to it with regard to the characteristics of the files to be loaded and checked:

- the specific family with which the administrative file is associated (for example: DSN, individuals, employers, etc.); these families are used to distinguish between the broad types of files to be checked.

- the norm with which the administrative file is associated. The norm is a set of characteristics describing the file (subset of the family), most often valid for a specific period of time and used to calibrate the tests.

- the periodicity of the file received; this provides the application with information regarding the temporality of the files to be checked.

* XML stands for Extensible Markup Language.

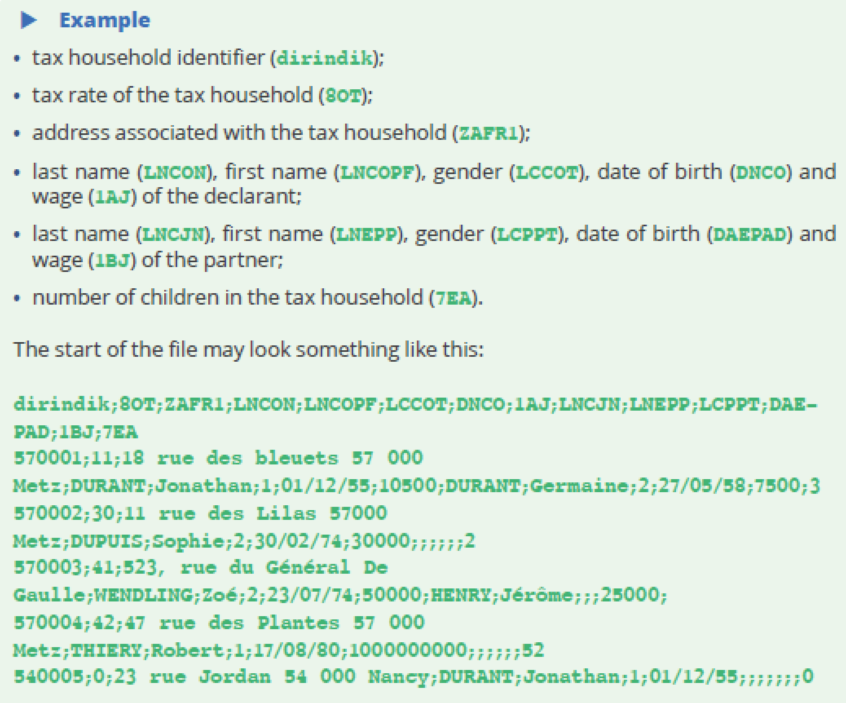

The remainder of this article will be illustrated using a fictitious administrative file used by INSEE by way of example. This example is simplistic, but allows us to take account of the different transformations that are possible and to illustrate the use of a “pipeline” for the purposes of integrating a source.

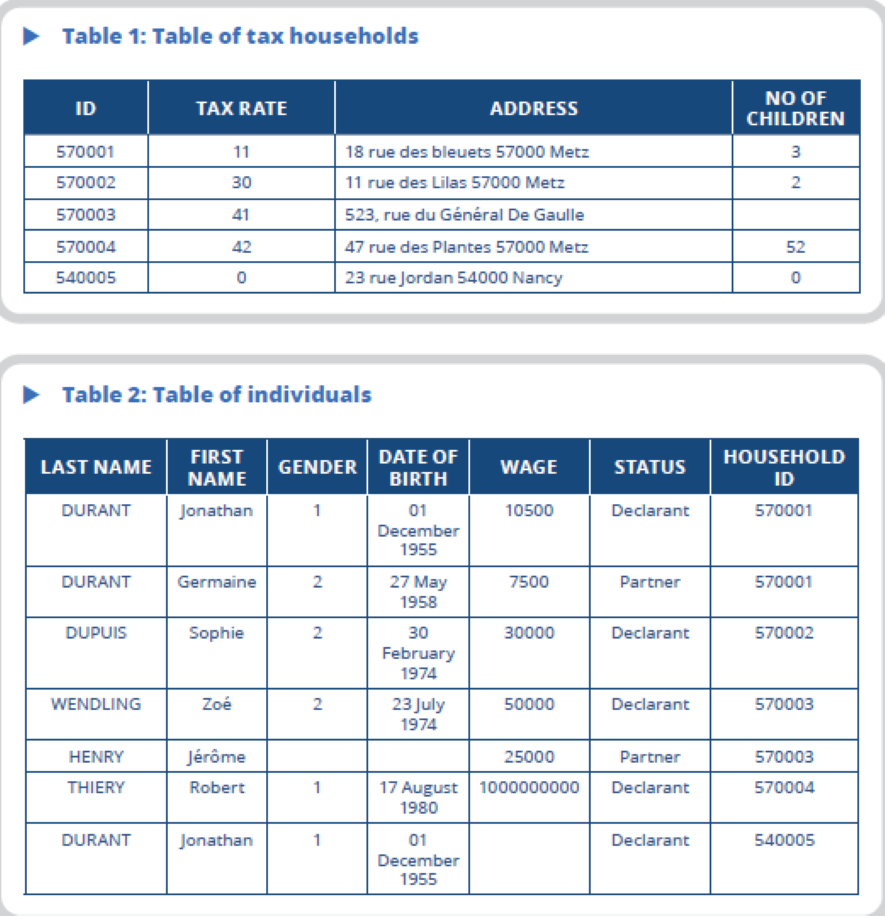

Let’s imagine that the content of the data sent to INSEE by means of a file in CSV format (with “;” as a separator) is as follows:

In our example, an individual’s wage is only allocated to one tax household, but an individual can be allocated to several tax households, for example if they own a second home in a different region to that in which they declare their wage: this is the case for Jonathan Durant in this example.

Renaming variables: essential for better understanding them

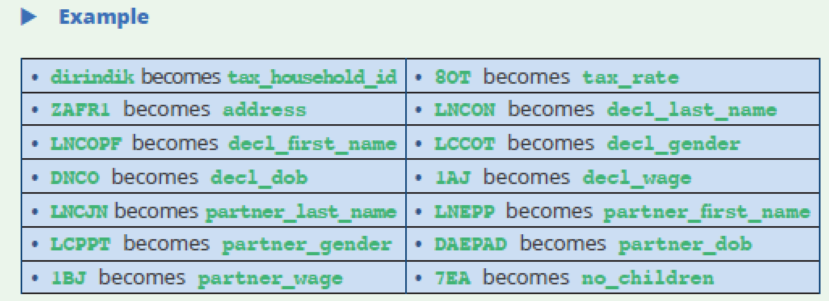

In order to use variables from an administrative source, it is easier when they have a name that can be understood (for example: decl_wage) rather than retaining the name in the original file that corresponds to the name of the box in the tax form (1AJ) in this example.
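As a minimal sketch of this renaming step, the rules can be held as data and applied when the “;”-separated file is read. Only the box name `1AJ` and the target name `decl_wage` come from the text; the other column names, the sample row and the `read_and_rename` helper are invented for illustration.

```python
import csv
import io

# Hypothetical extract of a ";"-separated file (values are invented).
SAMPLE = "NOM;PRENOM;1AJ\nDurant;Jonathan;25000\n"

# Renaming rules kept as data so that they can be versioned and replayed.
RENAMING = {"NOM": "last_name", "PRENOM": "first_name", "1AJ": "decl_wage"}

def read_and_rename(text, renaming):
    """Read the delimited text and rename the selected columns."""
    reader = csv.DictReader(io.StringIO(text), delimiter=";")
    # Columns absent from the mapping are not carried over.
    return [{renaming[k]: v for k, v in row.items() if k in renaming}
            for row in reader]

rows = read_and_rename(SAMPLE, RENAMING)
```

Keeping the mapping outside the code means that a change of box name in the tax form only requires an update to `RENAMING`.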

The names of variables in external files are often linked to the context in which they were collected and they will ideally be renamed at this stage to make them easy for statisticians to use (they will not necessarily have been directly involved in the data integration phase). However, it is not sufficient to simply rename the data to facilitate their use by statisticians. Descriptive metadata must also be captured, to provide a more precise description of the concept, the format of the variables, etc. The new names for the variables will therefore more accurately correspond to the statistical concepts that they cover. Therefore, in our example:

Restructuring data by statistical unit and linking them

Administrative management units (electricity meters, tax households) are generally different from the statistical units that are disseminated (dwellings, households, individuals, companies, etc.). These statistical units must therefore be derived if they are not directly present within the administrative source. This step results in the aggregation of several records or the deletion of records to create a statistical unit. For example, data can be grouped at the “household” level based on individual data, or grouped at the “individual” level based on data regarding employment contracts, etc. This is possible if the data within the external source allow this thanks to the presence of the household identifier in the records of individuals, for example. If such processing requires data external to the source, this operation will take place at a later stage of the process.

Conversely, it may be desirable to break a record within the input file down into several records in the statistical data model (this is the case for individuals within the tax files, which are structured as tax households). It then becomes necessary to explain the links between different statistical units. In the context of tax data, establishing this link between individuals and tax households on the one hand, and tax households and addresses on the other hand, ultimately allows us to create households.

In our example, the file sent contains a “mix” of two types of statistical unit within a single record.

The first step is to create two different tables, one for individuals and the other for tax households, within the “statistical” data model.

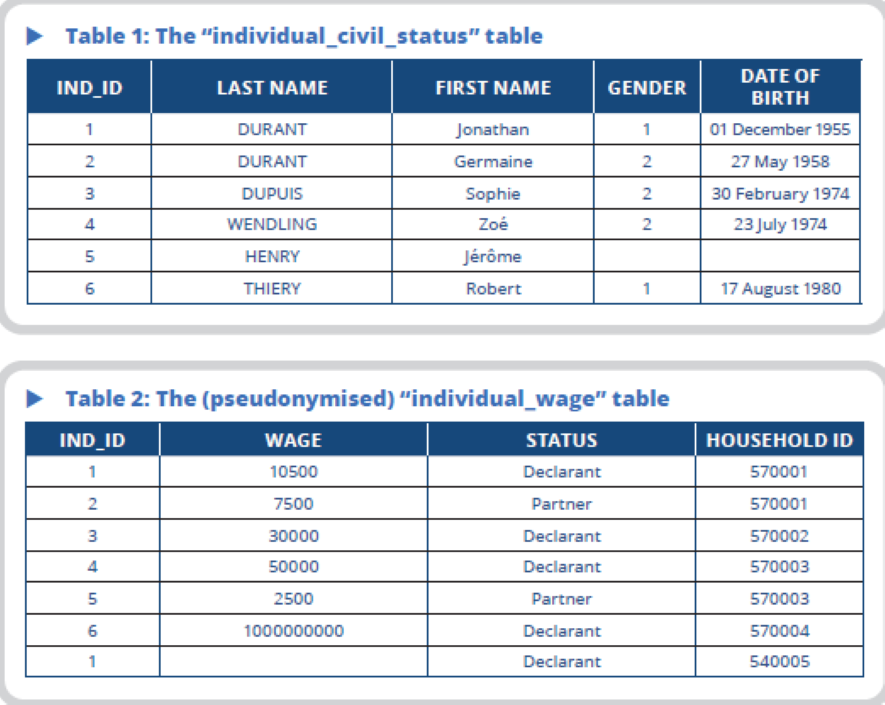

The data are integrated into the following two tables:

The original data can be reconstituted by joining these two tables using the household_id variable, which is present in both tables. It is a case of restructuring the information rather than changing it.
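The split into two tables, and the fact that a join on `household_id` restores the original records, can be sketched as follows. The records and every variable name other than `household_id` are hypothetical.

```python
# Hypothetical mixed records: one row per individual, carrying household fields.
mixed = [
    {"household_id": "H1", "nb_children": 2, "ind_name": "A", "decl_wage": 20000},
    {"household_id": "H1", "nb_children": 2, "ind_name": "B", "decl_wage": 15000},
    {"household_id": "H2", "nb_children": 0, "ind_name": "C", "decl_wage": 30000},
]

HOUSEHOLD_VARS = ("household_id", "nb_children")
INDIVIDUAL_VARS = ("household_id", "ind_name", "decl_wage")

def split_units(rows):
    """Build one table per statistical unit from the mixed records."""
    households = {}
    individuals = []
    for r in rows:
        # One row per tax household, keyed by its identifier.
        households[r["household_id"]] = {k: r[k] for k in HOUSEHOLD_VARS}
        individuals.append({k: r[k] for k in INDIVIDUAL_VARS})
    return list(households.values()), individuals

def rejoin(households, individuals):
    """Join the two tables on household_id to rebuild the input records."""
    by_id = {h["household_id"]: h for h in households}
    return [{**by_id[i["household_id"]], **i} for i in individuals]

tax_household, individual = split_units(mixed)
```

Checking that `rejoin(tax_household, individual)` equals the input is one possible checkpoint confirming that no information was changed by the restructuring.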

Pseudonymising the data

Administrative sources may contain nominative information (social security number, last name, first names, etc.) that is not essential for statistical production; this information must be removed at this stage to comply with data privacy requirements, which is why the data are pseudonymised. In order to separate civil status data (last name, first name, date and place of birth and address) from data useful for compiling statistics (information regarding employment, income, etc.) as early as possible, the source civil status data are matched with an ID from the register, and this ID replaces the civil status data in the integrated data.

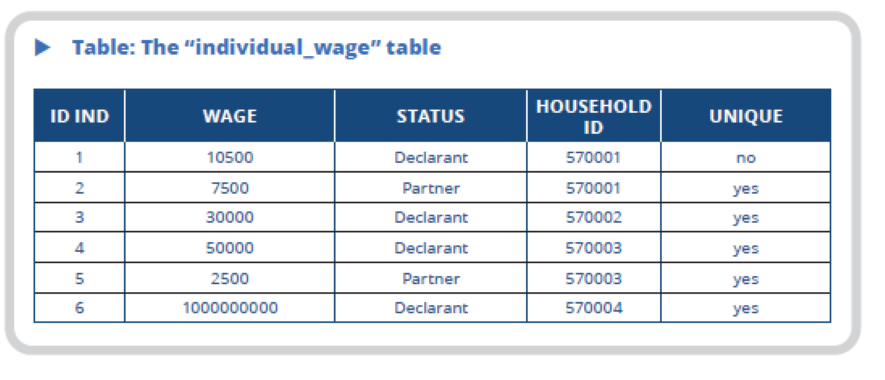

The implementation of such pseudonymisation in our example results in the table of individuals being bisected, with one table called “individual_civil_status”, which contains civil status data, and the second called “individual_wage”, which contains the other variables. The two tables are linked using the variable ind_id, which uniquely identifies an individual based on their last name, first name and date of birth. As can be seen, the “individual_civil_status” table only contains six rows, as Jonathan Durant is duplicated within the file.
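A minimal sketch of this split is given below. It assigns a sequential `ind_id` per distinct (last name, first name, date of birth) triple, which is an assumption made for illustration: in practice the identifier comes from matching against a register. The sample records are invented.

```python
def pseudonymise(rows):
    """Split rows into a civil-status table and a wage table linked by ind_id."""
    ids = {}  # (last_name, first_name, birth_date) -> ind_id
    civil_status, wages = [], []
    for r in rows:
        key = (r["last_name"], r["first_name"], r["birth_date"])
        if key not in ids:
            # Hypothetical sequential ID; a real one would come from a register.
            ids[key] = len(ids) + 1
            civil_status.append({"ind_id": ids[key], "last_name": key[0],
                                 "first_name": key[1], "birth_date": key[2]})
        # The wage table keeps the ID but no civil status data.
        wages.append({"ind_id": ids[key], "household_id": r["household_id"],
                      "decl_wage": r["decl_wage"]})
    return civil_status, wages

rows = [
    {"last_name": "X", "first_name": "A", "birth_date": "1980-01-01",
     "household_id": "H1", "decl_wage": 20000},
    {"last_name": "X", "first_name": "A", "birth_date": "1980-01-01",
     "household_id": "H2", "decl_wage": None},
    {"last_name": "Y", "first_name": "B", "birth_date": "1975-05-05",
     "household_id": "H1", "decl_wage": 15000},
]
individual_civil_status, individual_wage = pseudonymise(rows)
```

An individual who appears twice in the input yields a single row in `individual_civil_status` and two rows in `individual_wage`, which mirrors the duplicate described in the text.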

Recoding the values of the variables

Administrative sources may use different classifications from those used for statistics. This step makes it possible to conform to the latter classifications, provided that the transformation is fully automated (replacement of “M” with “1” for gender, for example). For records requiring additional human input (codifying the PCS for example), a recovery indicator can be set during the integration phase, so that the “manual” recoding is pushed back to a later stage in the production process.

Such processing directly concerns the values of one or more of the variables in the input file. It may involve:

- harmonisation of missing or “refuge” values in the event that missing values are processed differently depending on the variables. This will facilitate their identification during the adjustment phase;

- correction of outliers. This process may be pushed back to subsequent stages in the statistical process, but if it takes place upon integration, it must be possible for it to be completed without human input. In the example, it would be a case of simple processing, such as setting an incorrect date (30 February) as a missing value;

- normalisation of a variable. This may be a case of deleting special characters, changing to upper case letters, etc. Such processing may involve variables useful for identification in order to ensure that the normalisation rules are the same in the file to be identified and the register with which it needs to be compared. This type of processing is carried out for the purposes of allocating the Non-Significant Statistical Code, for example.
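The three kinds of automatic recoding listed above can be sketched in Python as follows. The gender code list follows the example in the text; the date format (ISO) and the normalisation rules are assumptions, and do not represent the rules actually used to allocate the Non-Significant Statistical Code.

```python
import re
import unicodedata
from datetime import date

GENDER_CODES = {"M": "1", "F": "2"}

def recode_gender(value):
    # Any value outside the code list becomes blank, with no manual step.
    return GENDER_CODES.get(value, "")

def clean_date(text):
    """Return an ISO date string, or None when the date does not exist."""
    try:
        return date.fromisoformat(text).isoformat()
    except (TypeError, ValueError):
        return None

def normalise_name(text):
    """Strip accents, replace non-letters with spaces and upper-case."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode()
    return re.sub(r"[^A-Za-z]+", " ", ascii_text).strip().upper()
```

For instance, `clean_date("2023-02-30")` returns `None`, so an impossible date is set to missing without being replaced by a guessed value.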

Calculating derived variables

Statisticians often use classifications (age group, company category, etc.) for the purposes of their studies. By calculating derived variables, they are able to convert from a year of birth contained within the administrative source to an age group.

This involves the creation of new variables that will be useful in subsequent phases. Examples include the creation of a statistical variable by means of aggregation or by splitting the input variables. Income can be defined as the sum of different items in the tax form, the address can be broken down into several fields, etc.

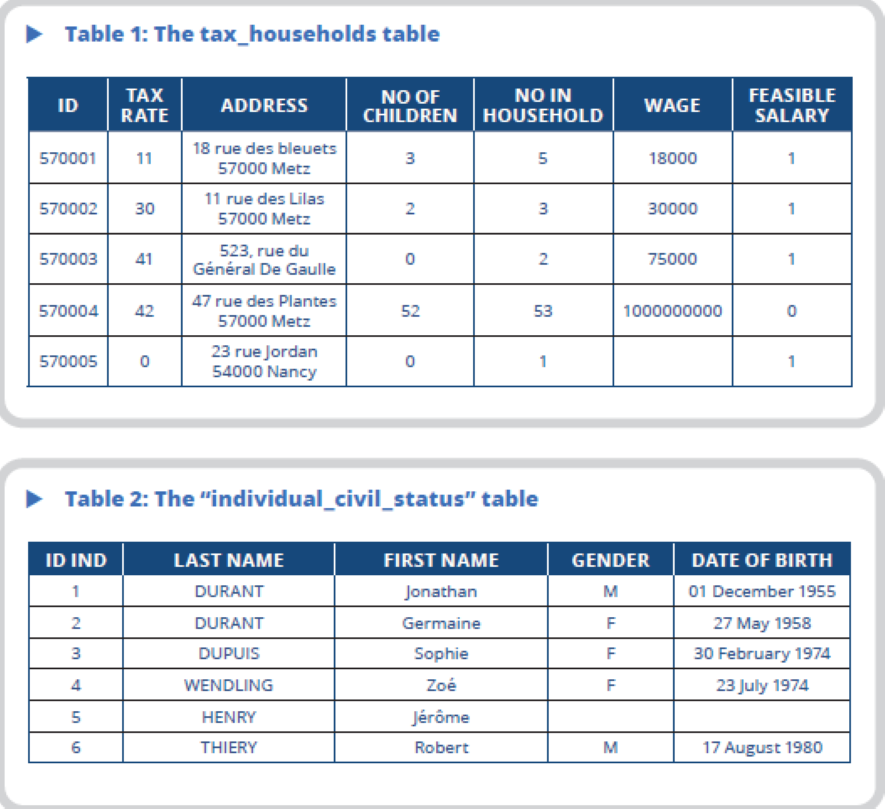

In the example, recoding has taken place and automatic derived variables have been created. They do not require any human input:

- coding of gender as: 1 = “M” and 2 = “F”, other = “blank”;

- calculation of a new variable (household_wage) in the tax_household table, corresponding to the sum of the wages of the declarants and their partner;

- calculation of a variable allowing a judgement to be made as to the plausibility of the household’s wages. This variable is equal to 1 if the household’s wage is below EUR 10 million, otherwise it is 0. It should be noted that no correction is performed at this stage in the process. Manual and automatic adjustments will be made during subsequent processing phases. This calculation is not necessarily applied to all variables. In the example, the outlier of 52 children has been left. This value will be corrected during processing downstream of the integration phase;

- calculation of the number of members of the household, which is equal to the number of children + 2 if a partner is present and to the number of children + 1 if not;

- calculation of a “unique” variable for individuals. This variable is 1 if the individual’s identifier is only present once in the individual_wage table, otherwise it is 0.
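The derived variables listed above can be sketched as follows. The field names `decl_wage`, `partner_wage`, `has_partner`, `nb_children`, `plausible_wage` and `nb_members` are hypothetical; only `household_wage`, the “unique” variable and the EUR 10 million threshold come from the text.

```python
from collections import Counter

WAGE_CEILING = 10_000_000  # EUR, plausibility threshold given in the text

def derive_household(h):
    """Add derived variables to one tax-household record, without correcting it."""
    wage = (h["decl_wage"] or 0) + (h["partner_wage"] or 0)
    return {**h,
            "household_wage": wage,
            "plausible_wage": 1 if wage < WAGE_CEILING else 0,
            "nb_members": h["nb_children"] + (2 if h["has_partner"] else 1)}

def flag_unique(individual_wage):
    """Set unique = 1 when the identifier appears only once in the table."""
    counts = Counter(r["ind_id"] for r in individual_wage)
    return [{**r, "unique": 1 if counts[r["ind_id"]] == 1 else 0}
            for r in individual_wage]
```

An implausible input such as 52 children is passed through unchanged: the sketch only adds flags and derived values, leaving correction to later phases.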

The tables obtained following this integration phase are as follows:

Incorporating filters

The coverage of the input source may be significantly broader than the coverage that is of statistical interest. In this case, if the source contains variables that allow the population of interest to be filtered, it is possible to do this at the integration stage to reduce the size of the statistical database. This also allows for compliance with the principles of minimisation and necessity under which all statisticians are compelled to restrict themselves, to the maximum extent possible, to using only the data they actually require. An example of this would be removing commercial premises from an administrative file when we are only interested in residential premises.

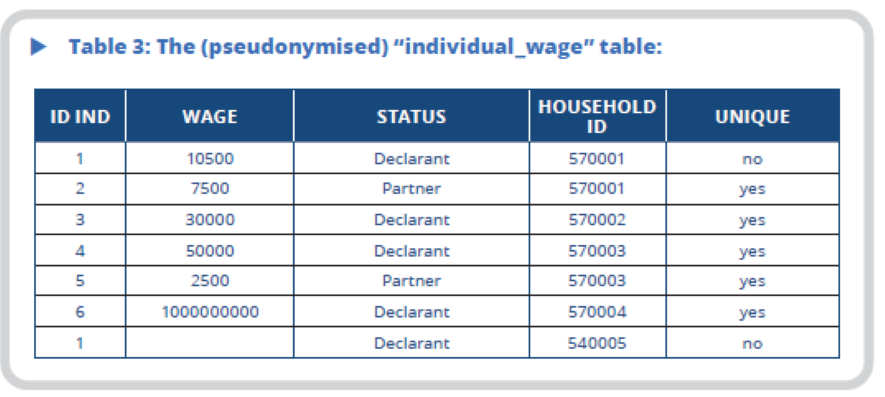

In the example, the rows of duplicate individuals with a missing wage value are not of interest. The unique variable is recalculated based on this new table.
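This filter can be sketched as follows, assuming a wage table keyed by a hypothetical `ind_id` with a `decl_wage` field that is `None` when missing. The sample rows are invented.

```python
from collections import Counter

def drop_empty_duplicates(individual_wage):
    """Remove rows of duplicated individuals with a missing wage, then recompute 'unique'."""
    counts = Counter(r["ind_id"] for r in individual_wage)
    kept = [r for r in individual_wage
            if not (counts[r["ind_id"]] > 1 and r["decl_wage"] is None)]
    # Recalculate the flag on the filtered table.
    new_counts = Counter(r["ind_id"] for r in kept)
    return [{**r, "unique": 1 if new_counts[r["ind_id"]] == 1 else 0} for r in kept]

rows = [
    {"ind_id": 1, "decl_wage": 20000},
    {"ind_id": 1, "decl_wage": None},  # duplicate without a wage: filtered out
    {"ind_id": 2, "decl_wage": None},  # not a duplicate: kept
]
filtered = drop_empty_duplicates(rows)
```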

The final individual_wage table is therefore as follows:

Establishing the data pipeline

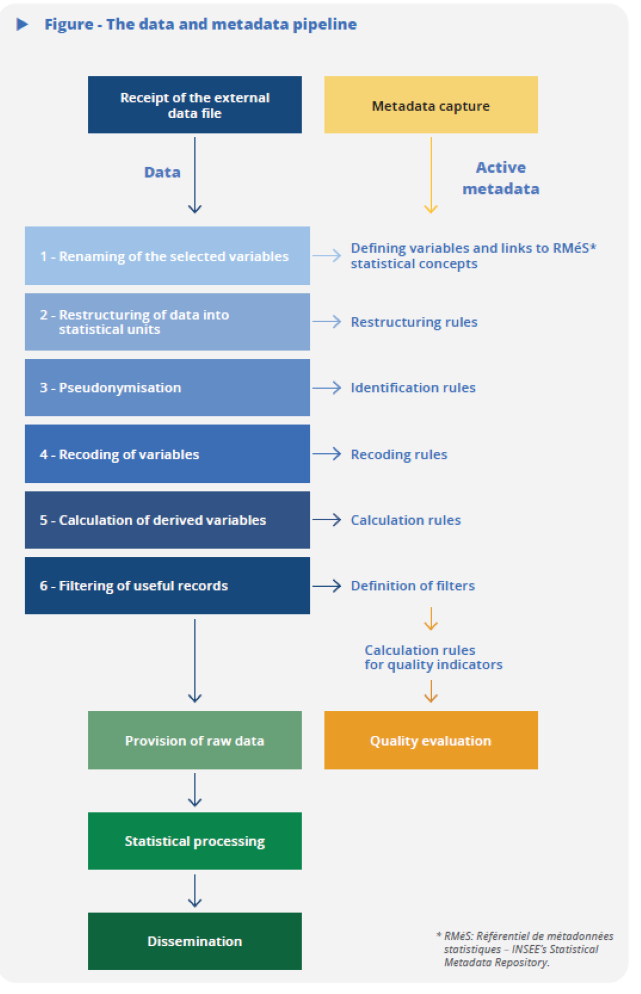

The source integration phase must be fully automated and reproducible. It involves the establishment of a general framework to ensure a systematic approach to data structured by an external producer. It must result in the establishment of a pipeline complete with checkpoints to ensure that the sequence of tasks is progressing correctly and to halt the process as soon as a potential issue is encountered.
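One way to picture such a pipeline is a list of stages, each paired with a checkpoint that halts the run when it fails. The sketch below is an illustration under that assumption; `run_pipeline`, `CheckpointError` and the two sample stages are hypothetical names, not part of ARC or any other tool mentioned here.

```python
class CheckpointError(RuntimeError):
    """Raised to halt the pipeline when a checkpoint fails."""

def run_pipeline(rows, stages):
    """Run (name, step, check) stages in order; stop at the first failed check."""
    log = []
    for name, step, check in stages:
        rows = step(rows)
        if not check(rows):
            raise CheckpointError(f"checkpoint failed after stage '{name}'")
        log.append((name, len(rows)))  # minimal trace for reproducibility
    return rows, log

stages = [
    ("rename",
     lambda rs: [{"decl_wage": r["1AJ"]} for r in rs],
     lambda rs: all("decl_wage" in r for r in rs)),
    ("filter",
     lambda rs: [r for r in rs if r["decl_wage"] is not None],
     lambda rs: len(rs) > 0),
]
result, log = run_pipeline([{"1AJ": 1200}, {"1AJ": None}], stages)
```

Raising an exception rather than logging a warning reflects the requirement that the process stops as soon as a potential issue is encountered, so that no later stage runs on doubtful data.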

The aim of this industrialisation is to establish a sequence of generic, customisable processing activities that are as independent as possible from the input source. Integrating a new source (or updating an existing source) is therefore a case of describing the input source, the desired output data model and the transformations required in order to turn one into the other. The data integration step sequence then takes place automatically based on these metadata, which become active metadata. As a result, by specifying the expected representation of a variable (M, F for the gender variable, for example), it is possible to automatically generate value-checking processing activities.
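The generation of value checks from metadata can be sketched as follows. The metadata layout (`allowed`, `type`, `min`) and the function names are assumptions chosen for this example; they do not correspond to an existing metadata standard or repository.

```python
# Hypothetical metadata: expected representation of each variable.
METADATA = {
    "gender": {"allowed": {"M", "F"}},
    "nb_children": {"type": int, "min": 0},
}

def build_checks(metadata):
    """Generate one validation function per variable from its metadata."""
    def make(var, spec):
        def check(row):
            v = row.get(var)
            if "allowed" in spec and v not in spec["allowed"]:
                return False
            if "type" in spec and not isinstance(v, spec["type"]):
                return False
            if "min" in spec and v < spec["min"]:
                return False
            return True
        return check
    return {var: make(var, spec) for var, spec in metadata.items()}

def invalid_variables(row, checks):
    """Names of the variables whose value violates its metadata."""
    return sorted(var for var, check in checks.items() if not check(row))

checks = build_checks(METADATA)
```

With this design, adding a new permitted value means editing `METADATA` only; the checking code itself does not change, which is what makes the metadata “active”.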

Under these conditions, some of the more precise metadata are of particular interest when it comes to transforming data. Upstream, the documentation of processing activities may extend to their specification in a more or less formal language, such as BPMN or directed acyclic graph (DAG) for the process as a whole and SQL or VTL (Box 2) for the transformations themselves. This offers the advantage of allowing for the full or partial automation of the process where active metadata are involved. The specification in VTL is a form of metadata (it describes the transformation in a language that can be understood by statisticians) that is then implemented automatically using IT tools, which then makes it directly active. Downstream, accurate tracing of operations (which variables were used to calculate another variable, for example) allows for improved process control and promotes reproducibility and transparency. This is known as metadata provenance or lineage.
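Lineage tracing can be illustrated by recording, for each derived variable, the variables it was calculated from. The `TracedTable` class below is a hypothetical sketch of that idea, not the mechanism used by any tool cited in this article.

```python
class TracedTable:
    """Rows plus a lineage map: derived variable -> source variables."""

    def __init__(self, rows):
        self.rows = rows
        self.lineage = {}

    def derive(self, target, sources, func):
        # Apply func to the source values of each row and record provenance.
        self.rows = [{**r, target: func(*(r[s] for s in sources))}
                     for r in self.rows]
        self.lineage[target] = list(sources)
        return self

    def ancestors(self, var):
        """All variables that directly or indirectly feed into var."""
        found = set()
        for src in self.lineage.get(var, []):
            found |= {src} | self.ancestors(src)
        return found

t = TracedTable([{"decl_wage": 20000, "partner_wage": 15000}])
t.derive("household_wage", ["decl_wage", "partner_wage"], lambda a, b: a + b)
t.derive("plausible_wage", ["household_wage"], lambda w: 1 if w < 10_000_000 else 0)
```

Calling `t.ancestors("plausible_wage")` then lists every input variable that would have to be re-examined if the plausibility flag looked wrong.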

Box 2: VTL, a language for validating and transforming data

VTL (Validation and Transformation Language) is a language for specifying data validation and transformation processing. It was developed within the framework of the SDMX* standard, which is used to exchange statistical data and metadata. Intended for statisticians, it provides a neutral view (independent of technical implementation) of the data process at the business level. As a specification language, VTL is sufficiently rich and expressive to allow for the definition of relatively complex processing activities.

VTL has characteristics that make it especially interesting for use in the context of the industrialisation and automation of statistical processes. VTL is therefore suitable for the integration of external sources.

First, since VTL is positioned at the logical level, which sits between design and implementation, it is not directly executable, unlike Java, R or Python. VTL expressions must be transmitted to an engine, which then executes them on a lower-level platform, such as Java, Python or C#. This allows for a clear separation of concerns** between the statistician, who focuses on the specifications for processing, and the computer scientist, who is responsible for implementation. In directly executable languages, the logical formulation of processing is often embedded in the implementation details and difficult to reconstruct. With VTL, the specification is treated as an object in its own right, which can therefore be managed, versioned, traced, shared, documented, etc.

Another useful property of VTL is that it is based on a data model that is derived from international standards (GSIM, SDMX, DDI***) and that is suitable for statistical use and for different types of data (detailed, aggregated, qualitative, quantitative, etc.). At the core of this model is the Data Set, made up of components (columns within a tabular file) that play different roles (identifiers, measures and attributes), and rows (Data Points). This model allows expressions to be simplified: for example, if a sum is performed on a data set, there is no need to specify that the operation only applies to measures and not to identifiers or attributes.
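The benefit of assigning roles to components can be illustrated in Python. The sketch below is not a VTL engine and does not use VTL syntax: it only mimics the behaviour described above, with a hypothetical `DataSet` class in which attributes are left out for brevity.

```python
from dataclasses import dataclass

@dataclass
class DataSet:
    identifiers: tuple  # component names that identify a Data Point
    measures: tuple     # component names that carry the values
    rows: list          # Data Points, as dicts

    def __add__(self, other):
        """Match Data Points on identifiers and add measures only."""
        index = {tuple(r[i] for i in self.identifiers): r for r in other.rows}
        out = []
        for r in self.rows:
            key = tuple(r[i] for i in self.identifiers)
            if key in index:
                summed = {m: r[m] + index[key][m] for m in self.measures}
                out.append({**{i: r[i] for i in self.identifiers}, **summed})
        return DataSet(self.identifiers, self.measures, out)

a = DataSet(("household_id",), ("wage",), [{"household_id": "H1", "wage": 100}])
b = DataSet(("household_id",), ("wage",), [{"household_id": "H1", "wage": 50}])
total = a + b
```

Because the roles are part of the data structure, the expression `a + b` does not need to state which columns to add and which to match on.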

Lastly, VTL is described by a formal grammar, which ensures the logical foundation of the language and allows it to be used in an automated manner, particularly through the construction of tools such as editors or execution engines for different technical platforms. This ensures that the same expression will be executed in a consistent manner in different, lower-level languages.

Many tools have been developed on the basis of VTL by statistical and banking communities. The most active players are the Bank of Italy, INSEE and private companies.

* SDMX stands for Statistical Data and Metadata eXchange.

** https://en.wikipedia.org/wiki/Separation_of_concerns.

*** GSIM stands for Generic Statistical Information Model and DDI stands for Data Documentation Initiative.

These active metadata allow the designer to work as independently as possible and to more easily adapt their pipeline to changes in the external sources. For example, the Directorate-General for Public Finance (Direction générale des finances publiques – DGFiP) recently changed the way that it registers residential outbuildings in its information system: in the past they were linked to a main premises, whereas they are now considered to be dwellings in their own right. Updates such as these therefore require a revision of the filter used to define coverage or even the addition of new variables. Using active metadata, statisticians can create a new variable within the host data model in RMéS (Bonnans, 2019), which makes it possible to identify outbuildings, establish a method for calculating this new variable within ARC using the variables within the DGFiP file, and modify the VTL script used to define its coverage to take account of this new variable. A statistician is then completely free to modify their pipeline, to take into account the changes to the structure of the input file and to trace these.

In the pipeline relating to the different stages of data integration (figure), the statistician enters the metadata upstream (definition of variables, link to a statistical concept, transformation rule, etc.). The step sequence then progresses automatically based on these metadata, resulting in standardisation and a clear separation between logical specification and technical implementation, and enabling greater autonomy for the statistician.

Figure - The data and metadata pipeline

As a result of this process, the administrative data are integrated into the information system in the form of raw data. In addition, a quality assessment is produced in parallel to provide initial quality indicators (number of records, partial non-response rate, etc.), which give initial insights into the quality of the raw data.
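The two indicators named above can be computed with a few lines of Python. This sketch assumes that a missing value is represented by `None` or an empty string; the function name and the sample records are hypothetical.

```python
def quality_indicators(rows, variables):
    """Number of records and partial non-response rate per variable."""
    n = len(rows)
    rates = {}
    for var in variables:
        missing = sum(1 for r in rows if r.get(var) in (None, ""))
        rates[var] = missing / n if n else None
    return {"n_records": n, "partial_non_response": rates}

report = quality_indicators(
    [{"decl_wage": 20000, "gender": "1"},
     {"decl_wage": None, "gender": "2"},
     {"decl_wage": 15000, "gender": ""},
     {"decl_wage": 30000, "gender": "1"}],
    ["decl_wage", "gender"],
)
```

Producing such a report at each delivery makes it possible to compare successive versions of a source and to spot a sudden rise in missing values.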

Integrating data is good, but with metadata it is even better…

As is the case at any stage of the statistical process, the existence of complete and accurate metadata when transforming data is key to ensuring that operations run smoothly. Moreover, as transformation-related processing often takes place at the very beginning of the process, it is necessary to capture or create the metadata that will be reused in subsequent phases.

As a general rule, administrative data are described with their own metadata. Some of these may be reused, but not necessarily all of them. In addition, the statistical metadata resulting from the integration process differ from the original metadata (the classification may be different, the associated statistical concepts are specific to statistical data, etc.).

Different types of metadata are of particular importance when it comes to transforming data.

Metadata can themselves be subject to transformation alongside the transformation of data. For example, the renaming of variables can be seen as a transformation of metadata.

The structural metadata, i.e. the definition of the variables used, the statistical concepts to which they relate, the type and the value domain, which are occasionally limited by code lists, must be defined at the earliest possible opportunity. It is important that they are available in formats that allow their use to be easily automated for the purposes of validating the processing performed. Descriptive metadata are another important category of metadata. They store all of the information necessary to facilitate the discovery and understanding of the data and to evaluate their quality or suitability for a specific use, etc. The documentation detailing the processing carried out and the methods used, as well as the conditions under which data are provided, can also be considered descriptive metadata.
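As a minimal sketch of how structural metadata in a machine-readable form can be used to validate processing automatically, the following example checks records against a type and an optional code list. The `VariableSpec` layout, the variable names and the code list values are assumptions made for illustration; they do not reflect the actual RMéS data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VariableSpec:
    """Structural metadata for one variable (hypothetical layout)."""
    name: str
    concept: str
    dtype: type
    code_list: Optional[frozenset] = None  # None: value domain not restricted


def validate_record(record, specs):
    """Return the list of violations found in one record."""
    errors = []
    for spec in specs:
        value = record.get(spec.name)
        if value is None:
            continue  # missing values are left to the non-response indicators
        if not isinstance(value, spec.dtype):
            errors.append(
                f"{spec.name}: expected {spec.dtype.__name__}, "
                f"got {type(value).__name__}"
            )
        elif spec.code_list is not None and value not in spec.code_list:
            errors.append(f"{spec.name}: value {value!r} not in code list")
    return errors


SPECS = [
    VariableSpec("dwelling_type", "Type of dwelling", str,
                 frozenset({"HOUSE", "FLAT", "OUTBUILDING"})),
    VariableSpec("n_rooms", "Number of rooms", int),
]

good = {"dwelling_type": "FLAT", "n_rooms": 3}
bad = {"dwelling_type": "CASTLE", "n_rooms": "three"}
print(validate_record(good, SPECS))
print(validate_record(bad, SPECS))
```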

… with a quality measure performed at the earliest opportunity

Although the source has been qualified upstream and its quality deemed sufficient for the production of statistics, it is important to have an initial measure of the quality of the data as soon as they are integrated (Six and Kowarik, 2022, UNECE Statswiki Quality). This provides an initial idea of the intrinsic quality of the source and allows an ongoing quality improvement process to be initiated at the earliest opportunity. This phase also allows users to familiarise themselves with new sources and better understand their structure and content.

In terms of integration, this quality measure (commonly referred to as data profiling in the international literature) consists of defining indicators that will provide an initial measure of the quality of the source and make it possible to monitor its development over time, by comparing successive deliveries from the source (monitoring). The following are some examples of these indicators:

- the number of records received for each type of statistical unit;

- the totals of the variables of interest;

- the partial non-response rate for each variable;

- the frequency of categories in the case of qualitative variables, used to identify categories that are rarely or never used;

- the distribution and detection of outliers;

- the identification of codes that have not been used for variables associated with a nomenclature;

- the identification of duplicates, etc.
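Several of the indicators listed above can be computed in a few lines. The sketch below is illustrative only: the function `profile`, the record layout and the variable names are hypothetical, and blank strings and `None` are assumed to be the harmonised representations of a missing value.

```python
from collections import Counter


def is_missing(value):
    """Assumed convention: None or an empty string means 'not provided'."""
    return value is None or value == ""


def profile(records, id_variable, variables, numeric_variables=(), code_lists=None):
    """Compute basic data-profiling indicators for one delivery."""
    code_lists = code_lists or {}
    n = len(records)
    # Partial non-response rate for each variable.
    non_response = {
        v: (sum(is_missing(r.get(v)) for r in records) / n if n else 0.0)
        for v in variables
    }
    # Totals of the numeric variables of interest.
    totals = {
        v: sum(r[v] for r in records if not is_missing(r.get(v)))
        for v in numeric_variables
    }
    # Frequency of each category, and codes of the list never observed.
    frequencies = {
        v: Counter(r.get(v) for r in records if not is_missing(r.get(v)))
        for v in code_lists
    }
    unused_codes = {
        v: sorted(set(codes) - set(frequencies[v]))
        for v, codes in code_lists.items()
    }
    # Identifiers that appear more than once.
    id_counts = Counter(r.get(id_variable) for r in records)
    duplicates = sorted(k for k, c in id_counts.items() if k is not None and c > 1)
    return {
        "n_records": n,
        "non_response_rate": non_response,
        "totals": totals,
        "frequencies": {v: dict(c) for v, c in frequencies.items()},
        "unused_codes": unused_codes,
        "duplicate_ids": duplicates,
    }


RECORDS = [
    {"id": "A1", "status": "OWNER", "wage": 21000},
    {"id": "A2", "status": "TENANT", "wage": None},
    {"id": "A2", "status": "TENANT", "wage": 30000},
    {"id": "A3", "status": "", "wage": 25000},
]
report = profile(RECORDS, "id", ["status", "wage"], ["wage"],
                 {"status": ["OWNER", "TENANT", "FREE"]})
print(report)
```

In practice, one such report would be produced per type of statistical unit and stored with each delivery so that successive deliveries can be compared.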

The analysis of these indicators will often require a request for clarification from the producer, at least the first few times that files are received. This may also result in the producer introducing checks into their own process in order to improve the quality of data collection. For example, the work carried out at INSEE during the receipt of the Nominative Social Declaration (Déclaration sociale nominative – DSN) resulted in the GIP-MDS adding an NIR check to its DSN data collection interface, which significantly improved the quality of the NIR within the DSN. Such indicators can be used to identify at the earliest opportunity whether the data provided are incomplete, for instance when the number of records or the totals for certain variables are lower than in previous deliveries. These indicators are also used to identify problems that can be corrected by subsequent processes (e.g. a quality indicator on household wages that flags a suspect wage of one billion euros). These quality indicators will therefore be useful to users of the raw data when they come to manage their own processing activities.
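The monitoring of successive deliveries and the flagging of suspect values can be sketched as follows. The function names, the 5% tolerance and the ten-million-euro ceiling are assumptions chosen for the example, not thresholds used at INSEE.

```python
def compare_deliveries(previous, current, tolerance=0.05):
    """Flag indicators that fell by more than `tolerance` (relative drop)."""
    alerts = []
    for name, old in previous.items():
        new = current.get(name)
        if new is None:
            alerts.append(f"{name}: missing from current delivery")
        elif old > 0 and (old - new) / old > tolerance:
            alerts.append(f"{name}: dropped from {old} to {new}")
    return alerts


def flag_suspect_values(values, ceiling):
    """Return the positions of values above an assumed plausibility ceiling."""
    return [i for i, v in enumerate(values) if v is not None and v > ceiling]


previous = {"n_records": 1000, "total_wage": 25_000_000}
current = {"n_records": 900, "total_wage": 24_900_000}
alerts = compare_deliveries(previous, current)
print(alerts)

wages = [21000, 1_000_000_000, None, 30000]
suspects = flag_suspect_values(wages, ceiling=10_000_000)
print(suspects)
```

Here the record count fell by 10% and triggers an alert, while the 0.4% fall in the wage total stays within the tolerance. Flagged values are only marked, since their correction belongs to subsequent processes.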

Given the critical nature of this phase, specific tools have been developed.

Perspectives

The integration of administrative data into a statistical process is a key phase, the importance of which is recognised at international level (Box 3). It must be carefully identified and specified and the right tools must be used. It represents a change to the nature and “owner” of the data, which then becomes statistical and subject to the associated legal, methodological and organisational framework.

Box 3. Data transformation: the international framework

The international cooperation work aimed at the modernisation of official statistics* led by the UNECE initially focused on a process-oriented vision with the GSBPM model in particular, followed by the lesser-known GSDEM (2015). Data-related aspects have been brought into the spotlight in the context of various initiatives, initially by the UNECE:

- “Data Integration” project (2016): placed the emphasis on the integration of data (after reflections on Big Data), defined as the activity of combining at least two different data sources into a data set. In this regard, data transformation is not defined as a step in itself, since the focus is more on matching methods;

- design of a Common Statistical Data Architecture (CSDA** 2017 – 2018), which aims to “[support] statistical organisations in the design, collection, integration, production and dissemination of official statistics based on both traditional and new types of data sources”. It is based on principles common to modern data-oriented approaches (data as an asset, accessibility, reusability, use of standard models), and defines the capabilities required in order to use and manage statistical data and metadata. Data transformation is considered a high-level capability;

- “Data Governance Framework” project (2022, led by Mexico): it covers several of the areas discussed in this article, such as data governance, quality, metadata, etc.

The European Statistical System (ESS) is following a similar course:

- ESSnet ISAD (Integration of Survey and Administrative Data) project, completed in late 2008: mentions data transformation during the data preparation phase, prior to their integration (for example, through file matching);

- Then ESSnet “Data Integration” or “ADMIN” strategic project (ESS2020 ADMIN): the processing applied to transform the data sources is included in a data preparation phase that is not particularly detailed, though it is known to involve a significant amount of statistical work;

- ESSnet Big Data I and II (2015 to 2021): the elaborate business architecture identifies a data wrangling function, i.e. “the possibility of transforming data from the original source format into a desired target format that is better suited to analysis and subsequent processing,” and a data representation function, i.e. the addition of contextual and structural elements (derived data, codes, categories) to the raw data. These elements are positioned within a data convergence layer, between the raw data layer and the statistical data layer;

- “Trusted Smart Statistics” strategic approach: recent developments launched in this regard reuse the architecture defined by Big Data projects.

These various examples show that the issue of data transformation is clearly identified as such by international bodies.

* https://unece.org/statistics/modernization-official-statistics.

** https://statswiki.unece.org/display/DA/CSDA+2.0.

Integration must result in the implementation of a documented and reproducible pipeline of basic transformations, and it is desirable to define this pipeline formally, in a manner independent of any particular technology. When specifying this pipeline, it is important to take account of metadata, whether source metadata that may themselves be subject to transformation or metadata resulting from the integration process, such as provenance metadata. As with any process, quality indicators must also be produced in order to check and improve processing.

Beyond administrative data, the use of external data for statistical purposes is expected to increase, and the establishment of a methodological framework and shared tools for data acquisition will make it possible to industrialise this function, as is already the case for surveys within INSEE (Cotton and Dubois, 2019) and (Koumarianos and Sigaud, 2019). As for the latter, the management of processes through the activation of metadata, which leads to standardisation and a clear separation of logical specification from technical implementation, affords the statistician greater autonomy and improves the responsiveness of systems, which is key to adapting to external changes.

The logical specification of integration-related processing, which is still largely organised in silos, will also allow it to be made more modular and easier to share. In this regard, consideration can be given to transferring certain steps upstream to the data producer (such as the performance of pseudonymisation or recoding via smartphones prior to the transfer of information). This issue is most commonly encountered in connection with smart statistics. Lastly, this automated data integration phase is becoming increasingly useful today due to the mass availability of multiform data from sensors, digital tools and even electronic and social networks that reflect the social, environmental and economic reality. Whether they can be used to produce quality statistics remains to be seen.

Published on: 29/10/2024

Direction générale des finances publiques (Directorate-General for Public Finance): a directorate within the French central general government, which reports to the Ministry of the Economy.

Fichiers démographiques sur les logements et les individus (demographic files on dwellings and individuals)

GSBPM stands for Generic Statistical Business Process Model, a generic model that describes statistical production processes.

Metadata can be used for more than just their descriptive role through the use of a dedicated interface or client applications. These tools make it possible to take advantage of the comprehensive and standardised nature of metadata for the automatic production of components within the statistical process. Metadata then acquire a new status, shifting from “information facilitating the understanding of statistics” to “data participating in the production process”, hence the idea of active metadata (Bonnans, 2019).

ID number within the National Register for the Identification of Individuals (répertoire national des personnes physiques – RNIPP), more commonly referred to as the “social security number”.

See the article by Lionel Espinasse, Séverine Gilles and Yves-Laurent Bénichou on the Non-Significant Statistical Code (Code statistique non signifiant – CSNS) in this same issue.

PCS: Professions and Socio-professional Categories (Professions et Catégories Socioprofessionnelles)

For example, the day of birth is set to 00 rather than leaving it blank.

A distinction must be made between this step, which remains at the level of the representation of variables, and imputation processing, which takes place at a later stage of the process and is based on a statistical model. Let us consider that, for a given variable, there are several ways to represent the missing value (leaving it blank, adding 00, etc.). During integration, these values will be harmonised (all replaced with blank entries, for example). Their imputation does not take place until a subsequent phase.

The Business Process Model and Notation (BPMN) is the standard used to model business processes.

A directed acyclic graph (DAG) is a standard way of describing processes.

Référentiel de métadonnées statistiques – INSEE’s Statistical Metadata Repository.

Groupement d’intérêt public Modernisation des déclarations sociales (Public Interest Group for the modernisation of social declarations).

Further reading

BONNANS, Dominique, 2019. RMéS: INSEE’s Statistical Metadata Repository. In : Courrier des statistiques. [online]. 27 June 2019. Insee. N° N2, pp. 46-57. [accessed 24 May 2023].

COTTON, Franck and DUBOIS, Thomas, 2019. Pogues, a Questionnaire Design Tool. In : Courrier des statistiques. [online]. 19 December 2019. N° N3, pp. 17-28. [accessed 24 May 2023].

COURMONT, Antoine, 2021. Quand la donnée arrive en ville. Open data et gouvernance urbaine. Presses universitaires de Grenoble. ISBN 2706147350.

CROS, 2014. Handbook on Methodology of Modern Business Statistics. In : website of Collaboration in Research and Methodology for Official Statistics. [online]. [accessed 24 May 2023].

DURR, Jean-Michel, DUPONT, Françoise, HAAG, Olivier and LEFEBVRE, Olivier, 2022. Setting up statistical registers of individuals and dwellings in France: Approach and first steps. In : Statistical Journal of the IAOS (SJIAOS). Volume 38, n° 1, pp. 215-223. [online]. [accessed 24 May 2023].

ESS Vision 2020 ADMIN (Administrative data sources). In : website of Collaboration in Research and Methodology for Official Statistics. [online]. [accessed 24 May 2023].

FERMOR-DUNMAN, Verena and PARSONS, Laura, 2022. Data Acquisition processes improving quality of microdata at the Office for National Statistics. Q2022 Vilnius.

HAND, David, 2018. Statistical challenges of administrative and transaction data. In : Journal of the Royal Statistical Society: Series A (Statistics in Society). 9 February 2018. Volume 181, N° 3, pp. 555-605. [online]. [accessed 24 May 2023].

KOUMARIANOS, Heïdi and SIGAUD, Éric, 2019. Eno, a Collection Instrument Generator. In : Courrier des statistiques. [online]. 19 December 2019. N° N3, pp. 29-44. [accessed 24 May 2023].

LAMARCHE, Pierre and LOLLIVIER, Stéfan, 2021. FIDÉLI, The integration of tax sources into social data. In : Courrier des statistiques. [online]. 8 July 2021. Insee. N° N6, pp. 28-46. [accessed 24 May 2023].

SIX, Magdalena and KOWARIK, Alexander, 2022. Quality Guidelines for the Acquisition and Usage of Big Data. [online]. [accessed 24 May 2023].

UNECE Integration, statswiki, A Guide to Data Integration for Official Statistics. In : UNECE statswiki website. [online]. [accessed 24 May 2023].

UNECE quality, statswiki, Quality. In : UNECE statswiki website. [online]. [accessed 24 May 2023].

UNECE GSBPM. In : UNECE statswiki website. [online]. [accessed 24 May 2023].